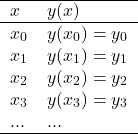

Suppose that you have a table or array of the values of a function

One can perform a linear interpolation\ to obtain ![]() for

for

![]() by assuming that the curve is well approximated by a straight line between adjacent points listed in the table

by assuming that the curve is well approximated by a straight line between adjacent points listed in the table

![]()

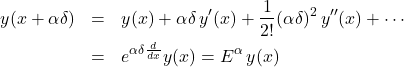

If the points ![]() are evenly spaced with

are evenly spaced with ![]() , the Lagrange interpolation can provide better accuracy. Notice that

, the Lagrange interpolation can provide better accuracy. Notice that

![]()

in which ![]() is the shift operator

is the shift operator

![]()

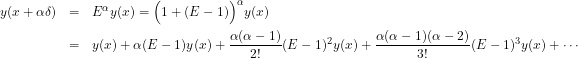

Then

(1)

which we can expand as

(2)

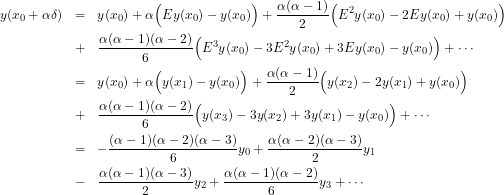

Collect terms together and use the fact that

![]()

then

(3)

and so on, here correct to third order differences. Notice that if ![]() , for

, for ![]() , we simply obtain

, we simply obtain ![]() . One would use this to get

. One would use this to get ![]() for

for ![]() in practice, given a set of

in practice, given a set of ![]() -values for regularly-spaced

-values for regularly-spaced ![]() -values, giving a significant improvement over linear interpolation. It can be used to develop predict-correct numerical integration algorithms (which is why we are interested in it). For example consider a function

-values, giving a significant improvement over linear interpolation. It can be used to develop predict-correct numerical integration algorithms (which is why we are interested in it). For example consider a function ![]() and its derivative

and its derivative ![]() , and apply this to the case

, and apply this to the case ![]() and

and ![]() instead of

instead of ![]() , we obtain

, we obtain

![]()

Now consider numerically integrating ![]() from

from ![]() to

to ![]() by Simpson’s rule

by Simpson’s rule

![]()

and into this insert the previous interpolation formula to eliminate ![]() on the right side to obtain the interpolation

on the right side to obtain the interpolation

![]()

Bashford-Adams-Milne integration is a predict-correct method based on the interpolation formula that we just derived. Consider a differential\equation

![]()

and make a guess solution ![]() that satisfies the boundary conditions (say

that satisfies the boundary conditions (say ![]() ) and build up a table from the guess solution. From the derivatives of the guess solution make the predictions

) and build up a table from the guess solution. From the derivatives of the guess solution make the predictions

![]()

then compute from these new values of the derivatives from

![]()

and then correct the prediction using Simpson’s rule

![]()

We simply iterate the procedure until desired accuracy is achieved. This method does not suffer from the accumulated errors of Euler. Here is an example in REDUCE for the equation

![]()

on rounded;

operator x,y,yp;

delta:=0.01;

for n:=0:100 do x(n):=n*delta;

% remember y'=!F

!F:=1.0+!Y^2;

y(0):=0.0;

% First guess

for n:=1:100 do y(n):=x(n);

for n:=0:100 do yp(n):=1.0;

% Predictor

% Iterate predict/correct cycle

for m:=0:10 do <<

for n:=4:100 do y(n):=y(n-4)+(8.0*delta/3.0)*(yp(n-3)-0.5*yp(n-2)+yp(n-1));

% Recompute y'

for n:=0:100 do yp(n):=sub(!Y=y(n), !F);

% Correct (note we DON't mess with y(0))

for n:=2:100 do y(n):=y(n-2)+(delta/3.0)*(yp(n-2)+4.0*yp(n-1)+yp(n)) >>;

% compare to exact solution

for n:=0:100 do write y(n), " ", tan(x(n));Note that ten cycles gives excellent accuracy (![]() versus

versus ![]() ).

).