Let $M$ be a symmetric matrix (this is rather important). Perform a $QR$ decomposition $M=Q_0R_0$, and create a new matrix

\[M_1=R_0Q_0\]

Note that the order of the factors is reversed. The off-diagonal matrix elements shrink in the process, but of course the eigenvalues and determinant are unchanged, since

\[M_1=R_0Q_0=Q_0^{-1}MQ_0=Q_0^{T}MQ_0\]

and we know that orthogonal transformations do not alter the eigenvalues, and a matrix can be diagonalized by repeated orthogonal transformation.
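As a small check of these statements, here is one step worked by hand. The $2\times 2$ symmetric matrix is chosen here purely for illustration (it does not come from the text above); its eigenvalues are $1$ and $3$.

\[M=\left(\begin{array}{cc} 2 & 1\\ 1 & 2\end{array}\right)=Q_0R_0,\qquad Q_0=\frac{1}{\sqrt{5}}\left(\begin{array}{cc} 2 & -1\\ 1 & 2\end{array}\right),\qquad R_0=\frac{1}{\sqrt{5}}\left(\begin{array}{cc} 5 & 4\\ 0 & 3\end{array}\right)\]

\[M_1=R_0Q_0=\frac{1}{5}\left(\begin{array}{cc} 14 & 3\\ 3 & 6\end{array}\right)\]

The trace ($14/5+6/5=4$) and the determinant ($(14\cdot 6-3\cdot 3)/25=3$) are the same as those of $M$, so the eigenvalues are still $1$ and $3$, while the off-diagonal element has shrunk from $1$ to $3/5$.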

Now decompose $M_1$ into $Q_1R_1$ and construct

\[M_2=R_1Q_1\]

Then

\[M_2=Q_1^{T}M_1Q_1=Q_1^{T}Q_0^{T}MQ_0Q_1\]

This process can be continued, and under the right set of conditions (if $M$ is a symmetric matrix), one eventually reaches a transformed $M_k$ that is approaching a diagonalized or triangularized state, as the off-diagonal (above, below the main diagonal or both) elements shrink to below machine precision. This is one of the most powerful and general diagonalization algorithms ever discovered.

The QR algorithm for eigenvalue extraction for a symmetric matrix is a repeated $QR$ decomposition followed by a matrix multiplication:

- Perform the $QR$ decomposition of $M=Q_0R_0$
- Create $M_1=R_0Q_0$
- Perform the $QR$ decomposition of $M_1=Q_1R_1$
- Create $M_2=R_1Q_1$
- Continue this process: $M_{k+1}=R_kQ_k$, and stop the process when the matrix elements of $M_k$ below the main diagonal are smaller than a chosen tolerance $\epsilon$, or after a fixed maximum number of iterations.
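The steps above can be sketched on the CPU in plain Python. This is only an illustrative sketch: the helper names (`qr_gram_schmidt`, `matmul`, `qr_eigenvalues`), the tolerance, the iteration cap and the small test matrix are all assumptions made here, and the $QR$ step uses a simple Gram-Schmidt pass rather than a library routine.

```python
import math

def qr_gram_schmidt(m):
    """QR factorization of a square, non-singular matrix given as a list of rows."""
    n = len(m)
    q_cols = []                                  # orthonormal columns of Q
    r = [[0.0] * n for _ in range(n)]
    for j in range(n):
        w = [m[i][j] for i in range(n)]          # j-th column of m
        for k in range(j):
            # remove the component along each earlier unit vector
            r[k][j] = sum(q_cols[k][i] * w[i] for i in range(n))
            w = [w[i] - r[k][j] * q_cols[k][i] for i in range(n)]
        r[j][j] = math.sqrt(sum(x * x for x in w))
        q_cols.append([x / r[j][j] for x in w])
    q = [[q_cols[j][i] for j in range(n)] for i in range(n)]
    return q, r

def matmul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def qr_eigenvalues(m, eps=1e-12, max_iters=1000):
    """Repeat M -> R Q until every entry below the diagonal is smaller than eps."""
    n = len(m)
    for _ in range(max_iters):
        q, r = qr_gram_schmidt(m)
        m = matmul(r, q)                         # note the reversed order
        if all(abs(m[i][j]) < eps for i in range(n) for j in range(i)):
            break
    return [m[i][i] for i in range(n)]

# Illustrative symmetric matrix with eigenvalues 3 - sqrt(3), 3 and 3 + sqrt(3)
eigs = qr_eigenvalues([[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]])
print(eigs)
```

The diagonal of the final matrix holds the eigenvalue estimates. Without shifts the convergence is only linear, so the loop needs on the order of tens of iterations even for this small matrix.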

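    # in julia, using CUDA to load onto the gpu
    # use the QR decomposition in the LinearAlgebra library
    using LinearAlgebra
    using CUDA
    # try it on a 256x256 array
    b = rand(256, 256);
    b = b + b';                      # symmetrize
    B = CuArray(b);                  # copy to the gpu
    for j in 1:600
        QR = qr(B)
        B = QR.R * CuArray(QR.Q)     # reversed product R*Q
    end
    # copy back to the host before reading the diagonal
    # (element-by-element indexing of a CuArray is slow and may be disallowed)
    diag(Array(B))
    # Full eigenvalue extraction to 64 bits in 28 seconds on jetson nano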

The only ways to speed this up are to do it on the gpu, to diminish the size of the matrix as you proceed (deflation), or to improve convergence by shifting. Let $I$ be the unit matrix.

- Create an empty list of eigenvalues.
- Let $c_0$ be the lower right corner diagonal entry of $M$. Perform the $QR$ decomposition of $M-c_0I=Q_0R_0$
- Make $M_1=R_0Q_0+c_0I$
- Let $c_1$ be the lower right corner diagonal entry of $M_1$. Perform the $QR$ decomposition of $M_1-c_1I=Q_1R_1$
- Make $M_2=R_1Q_1+c_1I$
- Continue this process until you get to some level $k$ such that all matrix elements in the last row of $M_k$ except for the one on the diagonal, the rightmost element, are smaller than $\epsilon$. Then add this last row rightmost element of $M_k$ to the list of eigenvalues.
- Form a smaller matrix by removing the last row and column from $M_k$, and repeat the whole process on the smaller array.
- Do this until the array is deflated to a $1\times 1$, then add its only element to the eigenvalue list.
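The shift-and-deflate steps above can be sketched in plain Python as follows. This is a hedged illustration, not the author's code: the names `qr_givens`, `matmul` and `shifted_qr_eigenvalues`, the default tolerance and the test matrix are assumptions made here. The $QR$ step uses Givens rotations, one possible choice that stays well behaved when the shifted matrix is nearly singular (which is exactly what happens as the shift approaches an eigenvalue).

```python
import math

def qr_givens(a):
    """QR factorization of a square matrix (list of rows) by Givens rotations."""
    n = len(a)
    r = [row[:] for row in a]
    q = [[float(i == j) for j in range(n)] for i in range(n)]
    for j in range(n):
        for i in range(j + 1, n):
            h = math.hypot(r[j][j], r[i][j])
            if h == 0.0:
                continue                          # nothing to eliminate
            c, s = r[j][j] / h, r[i][j] / h
            for k in range(n):
                # rotate rows j and i of R, and columns j and i of Q
                r[j][k], r[i][k] = c * r[j][k] + s * r[i][k], -s * r[j][k] + c * r[i][k]
                q[k][j], q[k][i] = c * q[k][j] + s * q[k][i], -s * q[k][j] + c * q[k][i]
    return q, r

def matmul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def shifted_qr_eigenvalues(m, eps=1e-12, max_iters=500):
    """Shifted QR iteration with deflation; returns the eigenvalues found."""
    cp = [row[:] for row in m]                    # working copy
    n = len(cp)
    eigs = []
    for _ in range(max_iters):
        if n == 1:
            eigs.append(cp[0][0])
            break
        shift = cp[n - 1][n - 1]                  # lower right diagonal entry
        for k in range(n):
            cp[k][k] -= shift
        q, r = qr_givens(cp)
        cp = matmul(r, q)
        for k in range(n):
            cp[k][k] += shift
        # deflate once the last row is diagonal to within eps
        if max(abs(x) for x in cp[n - 1][:n - 1]) <= eps:
            eigs.append(cp[n - 1][n - 1])
            cp = [row[:n - 1] for row in cp[:n - 1]]
            n -= 1
    return eigs

# Illustrative symmetric matrix with eigenvalues 3 - sqrt(3), 3 and 3 + sqrt(3)
eigs = shifted_qr_eigenvalues([[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]])
print(sorted(eigs))
```

If the iteration cap is reached before the matrix is fully deflated, the function returns only the eigenvalues collected so far, so the length of the result is worth checking.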

    # Implementation in R using Rmpfr multiple precision arrays
    # QR() is not defined in this section; it is assumed to be a QR decomposition
    # for mpfr matrices that returns a list whose components are named q and r
    # (the component names are an assumption).
    eigenval <- function(M, precision, iters){
      N <- ncol(M)
      cp <- M                          # working copy of the matrix
      eigs <- c()
      for (j in 1:iters){
        if (N == 1){
          eigs <- c(eigs, cp)
          break
        }
        shift <- cp[N,N]               # shift by the lower right diagonal entry
        for (k in 1:N){cp[k,k] <- cp[k,k] - shift}
        qr <- QR(cp)
        cp <- qr$r %*% qr$q            # reversed product R*Q
        for (k in 1:N){cp[k,k] <- cp[k,k] + shift}
        # deflate once the last row is diagonal to within the tolerance
        if (max(abs(cp[N,-N])) <= precision) {
          eigs <- c(eigs, cp[N,N])
          cp <- cp[1:(N-1), 1:(N-1)]
          N <- N - 1
        }
      }
      return(eigs)
    }

Convert this to julia and run it on the gpu, and we diagonalize the ![]() in ![]() seconds.

Any matrix $M$ can be factored

\[M=QR\]

into the product of an orthogonal matrix $Q$ and an upper-triangular matrix $R$, and Gram-Schmidt points the way. Think of it like this: the rows or columns of a non-singular matrix (one whose determinant is not zero) are a set of linearly independent vectors. These vectors can be transformed into a set of orthogonal unit vectors via GS.

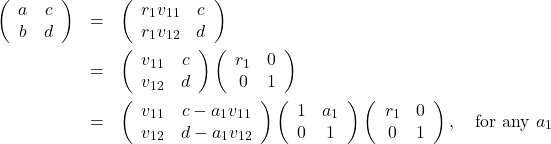

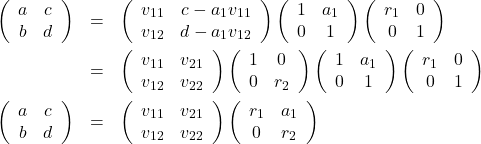

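Let’s do it then: start out with two linearly independent vectors $\mathbf{u}_1$ and $\mathbf{u}_2$ and create a matrix $M=(\mathbf{u}_1, \mathbf{u}_2)$ with these vectors as its columns. Let’s pick $\mathbf{u}_1$ and normalize it, $r_1=|\mathbf{u}_1|$, and use the result as $\mathbf{v}_1=\mathbf{u}_1/r_1$:

\[M=(\mathbf{u}_1, \mathbf{u}_2)=(\mathbf{v}_1, \mathbf{u}_2)\left(\begin{array}{cc} r_1 & 0\\ 0 & 1\end{array}\right)\]

Note that for any number $a_1$

\[M=(\mathbf{v}_1, \mathbf{u}_2-a_1\mathbf{v}_1)\left(\begin{array}{cc} 1 & a_1\\ 0 & 1\end{array}\right)\left(\begin{array}{cc} r_1 & 0\\ 0 & 1\end{array}\right)\qquad (1)\]

so we pick $a_1=\mathbf{v}_1\cdot\mathbf{u}_2$. Note that the second column of the new matrix is then perpendicular to the first column, so it must be $r_2\mathbf{v}_2$, where $r_2=|\mathbf{u}_2-a_1\mathbf{v}_1|$ and $\mathbf{v}_2$ is a unit vector:

\[M=(\mathbf{v}_1, \mathbf{v}_2)\left(\begin{array}{cc} 1 & 0\\ 0 & r_2\end{array}\right)\left(\begin{array}{cc} 1 & a_1\\ 0 & 1\end{array}\right)\left(\begin{array}{cc} r_1 & 0\\ 0 & 1\end{array}\right)=(\mathbf{v}_1, \mathbf{v}_2)\left(\begin{array}{cc} r_1 & a_1\\ 0 & r_2\end{array}\right)\qquad (2)\]

We began with an arbitrary matrix $M$ and decomposed it into a product of an orthogonal matrix $Q$ (made of orthogonal unit vectors $\mathbf{v}_1$, $\mathbf{v}_2$) and an upper-triangular matrix $R$.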
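A quick numerical check of the two-vector construction, as a minimal sketch. The vectors $(3,4)$ and $(1,2)$ are picked here only because they give round numbers, and the variable names simply mirror the symbols $r_1$, $a_1$, $r_2$, $\mathbf{v}_1$, $\mathbf{v}_2$ used in the text.

```python
import math

def dot(a, b):
    """Ordinary dot product of two vectors stored as lists."""
    return sum(x * y for x, y in zip(a, b))

# Two linearly independent vectors (illustrative values)
u1 = [3.0, 4.0]
u2 = [1.0, 2.0]
M = [[u1[0], u2[0]],
     [u1[1], u2[1]]]                      # u1 and u2 are the columns of M

r1 = math.sqrt(dot(u1, u1))               # length of u1
v1 = [x / r1 for x in u1]                 # first unit vector
a1 = dot(v1, u2)                          # component of u2 along v1
w = [x - a1 * y for x, y in zip(u2, v1)]  # u2 with that component removed
r2 = math.sqrt(dot(w, w))
v2 = [x / r2 for x in w]                  # second unit vector

Q = [[v1[0], v2[0]],
     [v1[1], v2[1]]]
R = [[r1, a1],
     [0.0, r2]]
QR = [[sum(Q[i][k] * R[k][j] for k in range(2)) for j in range(2)]
      for i in range(2)]

print(r1, a1, r2)                         # 5, 2.2 and 0.4 up to rounding
print(QR)                                 # reproduces M up to rounding
```

Here $\mathbf{v}_1=(0.6, 0.8)$ and $\mathbf{v}_2=(-0.8, 0.6)$, which are visibly perpendicular unit vectors.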

Let’s do it for three linearly independent vectors $\mathbf{u}_1$, $\mathbf{u}_2$, and $\mathbf{u}_3$, and make a matrix $M$ out of them

\[M=(\mathbf{u}_1, \mathbf{u}_2, \mathbf{u}_3)=\left(\begin{array}{ccc} u_{11} & u_{21} & u_{31}\\ u_{12} & u_{22} & u_{32}\\ u_{13} & u_{23} & u_{33}\end{array}\right)\]

Stage 0. Let $\mathbf{u}_1=r_1\mathbf{v}_1$ with $r_1=|\mathbf{u}_1|$, then

\[M=(\mathbf{u}_1, \mathbf{u}_2, \mathbf{u}_3)=\left(\begin{array}{ccc} v_{11} & u_{21} & u_{31}\\ v_{12} & u_{22} & u_{32}\\ v_{13} & u_{23} & u_{33}\end{array}\right)\left(\begin{array}{ccc} r_1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\]

Stage 1. Let $a_1=\mathbf{v}_1\cdot\mathbf{u}_2$, then

\[M=(\mathbf{u}_1, \mathbf{u}_2, \mathbf{u}_3)=\left(\begin{array}{ccc} v_{11} & u_{21}-a_1v_{11} & u_{31}\\ v_{12} & u_{22}-a_1 v_{12} & u_{32}\\ v_{13} & u_{23}-a_1 v_{13} & u_{33}\end{array}\right)\left(\begin{array}{ccc} 1 & a_1 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\left(\begin{array}{ccc} r_1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\]

let $r_2=|\mathbf{u}_2-a_1\mathbf{v}_1|$, and $\mathbf{v}_2=(\mathbf{u}_2-a_1\mathbf{v}_1)/r_2$;

\[M=\left(\begin{array}{ccc} v_{11} & v_{21} & u_{31}\\ v_{12} & v_{22} & u_{32}\\ v_{13} & v_{23} & u_{33}\end{array}\right)\left(\begin{array}{ccc} 1 & 0 & 0\\ 0 & r_2 & 0\\ 0 & 0 & 1\end{array}\right)\left(\begin{array}{ccc} 1 & a_1 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\left(\begin{array}{ccc} r_1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\]

Final stage, let $a_2=\mathbf{v}_1\cdot\mathbf{u}_3$, and $a_3=\mathbf{v}_2\cdot\mathbf{u}_3$

\[M=\left(\begin{array}{ccc} v_{11} & v_{21} & u_{31}-a_2 v_{11}-a_3 v_{21}\\ v_{12} & v_{22} & u_{32}-a_2 v_{12}-a_3 v_{22}\\ v_{13} & v_{23} & u_{33}-a_2 v_{13}-a_3 v_{23}\end{array}\right)\left(\begin{array}{ccc} 1 & 0 & a_2\\ 0 & 1 & a_3\\ 0 & 0 & 1\end{array}\right)\]

\[\cdot\left(\begin{array}{ccc} 1 & 0 & 0\\ 0 & r_2 & 0\\ 0 & 0 & 1\end{array}\right)\left(\begin{array}{ccc} 1 & a_1 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\left(\begin{array}{ccc} r_1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\]

Call $r_3=|\mathbf{u}_3-a_2\mathbf{v}_1-a_3\mathbf{v}_2|$, and $\mathbf{v}_3=(\mathbf{u}_3-a_2\mathbf{v}_1-a_3\mathbf{v}_2)/r_3$;

\[M=\left(\begin{array}{ccc} v_{11} & v_{21} & v_{31}\\ v_{12} & v_{22} & v_{32}\\ v_{13} & v_{23} & v_{33}\end{array}\right)\left(\begin{array}{ccc} 1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & r_3\end{array}\right)\left(\begin{array}{ccc} 1 & 0 & a_2\\ 0 & 1 & a_3\\ 0 & 0 & 1\end{array}\right)\]

\[\cdot\left(\begin{array}{ccc} 1 & 0 & 0\\ 0 & r_2 & 0\\ 0 & 0 & 1\end{array}\right)\left(\begin{array}{ccc} 1 & a_1 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\left(\begin{array}{ccc} r_1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{array}\right)\]

If you multiply it all out, you get

\[M=\left(\begin{array}{ccc} v_{11} & v_{21} & v_{31}\\ v_{12} & v_{22} & v_{32}\\ v_{13} & v_{23} & v_{33}\end{array}\right)\left(\begin{array}{ccc} r_1 & a_1 & a_2\\ 0 & r_2 & a_3\\ 0 & 0 & r_3\end{array}\right)\]

a full $QR$ decomposition of our original arbitrary $3\times 3$ matrix.
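The staged construction generalizes directly to any number of linearly independent vectors. The sketch below is one way to write it in plain Python; the function name `gram_schmidt_qr` and the three test vectors are assumptions made for illustration. The entries above the diagonal of `r` play the role of $a_1$, $a_2$, $a_3$ and the diagonal entries the role of $r_1$, $r_2$, $r_3$.

```python
import math

def gram_schmidt_qr(vectors):
    """Gram-Schmidt on linearly independent vectors u_1..u_n (the columns of M).

    Returns (vs, r): vs[k] is the unit vector v_{k+1}, and r is the
    upper-triangular matrix with M = (v_1, ..., v_n) r.
    """
    n = len(vectors)
    vs = []
    r = [[0.0] * n for _ in range(n)]
    for j, u in enumerate(vectors):
        w = list(u)
        for k, v in enumerate(vs):
            # coefficient of u_j along the earlier unit vector v_k
            r[k][j] = sum(a * b for a, b in zip(v, u))
            w = [wi - r[k][j] * vi for wi, vi in zip(w, v)]
        r[j][j] = math.sqrt(sum(x * x for x in w))   # length of what is left
        vs.append([x / r[j][j] for x in w])
    return vs, r

# Three linearly independent vectors (illustrative values)
us = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
vs, r = gram_schmidt_qr(us)

for row in r:
    print(row)        # upper-triangular: zeros below the diagonal
```

Each original vector is recovered as $\mathbf{u}_j=\sum_k r_{kj}\mathbf{v}_k$, which is the column-by-column statement of $M=QR$.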