Differentiation

We will associate with the point $(x,y)$ in the plane a **complex number** $z=x+iy$, with $i^2=-1$. We call $\bar z=x-iy$ the complex conjugate of $z$. The transformation $(x,y)\rightarrow (z,\bar z)$ can be regarded as a simple change of variable. Functions of the form

\[ f(z)=f(x+iy), \]

in other words, functions of the special combination $x+iy$ of $x$ and $y$, are actually functions of a single variable. However, for such functions the path along which we perform the limit in the derivative process is not unique. For example, we can write

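\[ {df\over dz}=\lim_{\Delta x\rightarrow 0}{f(x+\Delta x+iy)-f(x+iy)\over \Delta x} \]

or

\[ {df\over dz}=\lim_{\Delta y\rightarrow 0}{f(x+i(y+\Delta y))-f(x+iy)\over i\,\Delta y} \]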

and if these two definitions do not produce the same result, we are in deep trouble since calculus will essentially become dysfunctional.

We will refer to any function whose derivative in the complex plane is unique as being analytic.
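The two limits can be compared numerically. The following Python sketch evaluates the difference quotient once with a small real step and once with a small imaginary step; the test functions $z^2$ and $\bar z$, the sample point, and the step size are illustrative choices made here, not taken from the text.

```python
def quotient(f, z, dz):
    """Difference quotient (f(z + dz) - f(z)) / dz for a small complex step dz."""
    return (f(z + dz) - f(z)) / dz


def directional_derivatives(f, z, h=1e-6):
    """Return the 'horizontal' (dz = h) and 'vertical' (dz = i*h) quotients."""
    return quotient(f, z, h), quotient(f, z, 1j * h)


z0 = 0.7 + 0.4j  # arbitrary sample point (assumption)

# z**2: both routes agree, each is close to 2*z0
square_h, square_v = directional_derivatives(lambda z: z * z, z0)

# conj(z): the two routes disagree, close to +1 and -1
conj_h, conj_v = directional_derivatives(lambda z: z.conjugate(), z0)

print(square_h, square_v)
print(conj_h, conj_v)
```

If the two printed values agree for a function, that is consistent with (though of course not a proof of) a path-independent derivative at the sample point.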

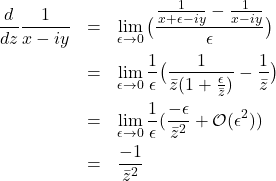

![]()

Proof. What a profound difference a sign can make;

(1)

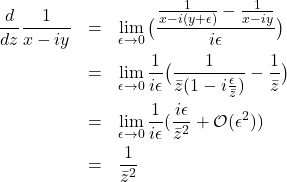

however, if we take the derivative by a different route:

(2)

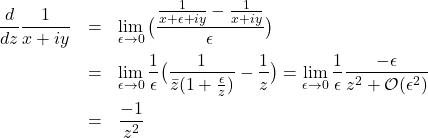

and these two are clearly not the same. Analytic means that the derivative is unique, and independent of the path along which the limit is taken. Notice that ![]() is analytic;

(3)

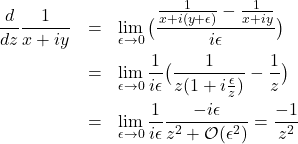

and along a different route;

(4)

A great deal can be said about the structure of analytic functions, and we will discover that their calculus is extremely simple. Consider a function $f(z)$, and imagine separating it into two functions, both real, one of them being the coefficient of all occurrences of $i$. Such a decomposition is called separating the real and imaginary parts of $f(z)$:

\[ f(z)=U(x,y)+i\,V(x,y) \]

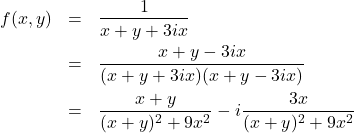

Consider

![]()

separate this into its real and imaginary parts

![]()

so

![]()

Examples

(5) ![]()

and so

![]()

(6)

therefore

![]()

Which of these functions are analytic, meaning, which possess unique derivatives at points $(x,y)$ in the plane?

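Compute the derivative of $f(z)=U(x,y)+i\,V(x,y)$ first “horizontally”, $\Delta z=\Delta x$;

\[ {df\over dz}=\lim_{\Delta x\rightarrow 0}{U(x+\Delta x,y)-U(x,y)+i\left(V(x+\Delta x,y)-V(x,y)\right)\over \Delta x} \]

\[ {df\over dz}={\partial U\over\partial x}+i{\partial V\over\partial x} \]

and then “vertically”, $\Delta z=i\,\Delta y$;

\[ {df\over dz}=\lim_{\Delta y\rightarrow 0}{U(x,y+\Delta y)-U(x,y)+i\left(V(x,y+\Delta y)-V(x,y)\right)\over i\,\Delta y} \]

\[ {df\over dz}={\partial V\over\partial y}-i{\partial U\over\partial y} \]

If these are supposed to be the same, then

\[ {\partial U\over\partial x}={\partial V\over\partial y},\qquad {\partial U\over\partial y}=-{\partial V\over\partial x} \]

These equations are called the Cauchy-Riemann conditions for analyticity at the point $z$.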
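The Cauchy-Riemann conditions can be tested numerically. The sketch below assumes the standard form of the conditions, $\partial U/\partial x=\partial V/\partial y$ and $\partial U/\partial y=-\partial V/\partial x$, and estimates the partial derivatives by central differences. The helper names, the test functions, and the sample point are hypothetical choices made for illustration.

```python
def partials(g, x, y, h=1e-5):
    """Central-difference estimates of (dg/dx, dg/dy) for a real function g(x, y)."""
    gx = (g(x + h, y) - g(x - h, y)) / (2 * h)
    gy = (g(x, y + h) - g(x, y - h)) / (2 * h)
    return gx, gy


def cauchy_riemann_residuals(f, x, y):
    """Return (U_x - V_y, U_y + V_x); both vanish where the conditions hold."""
    U = lambda a, b: f(complex(a, b)).real
    V = lambda a, b: f(complex(a, b)).imag
    Ux, Uy = partials(U, x, y)
    Vx, Vy = partials(V, x, y)
    return Ux - Vy, Uy + Vx


# z**3 satisfies both conditions; conj(z) violates the first one
analytic_case = cauchy_riemann_residuals(lambda z: z ** 3, 0.5, -0.3)
conjugate_case = cauchy_riemann_residuals(lambda z: z.conjugate(), 0.5, -0.3)
print(analytic_case)   # both entries close to 0
print(conjugate_case)  # first entry close to 2, second close to 0
```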

Integration

The very simplest of functions of a complex variable are called **entire**, meaning that a power series such as

\[ f(z)=\sum_{n=0}^{\infty} a_n z^n \]

defines the function at all points in the complex plane. This requires that the power series have an infinite radius of convergence.

Most functions are not this simple, and we have encountered dozens of examples of rational functions that contain singularities, points where they blow up. If we take an arbitrary function $f(z)$, it could have an entire part ![](), as well as a partial fraction portion containing all of its singularities. Suppose that the function has a singularity at $z_0$.

We define the principal part of $f(z)$ for the singularity $z_0$ to be

\[ p^{(0)}_f(z)={a^{(0)}_{-1}\over z-z_0}+{a^{(0)}_{-2}\over (z-z_0)^2}+\cdots +{a^{(0)}_{-N_0}\over (z-z_0)^{N_0}} \]

and in general a rational function could have many **poles** $z_0, z_1, z_2, \ldots$, and so would have a general structure

![]()

We will now classify functions in a more detailed way.

If a function possesses a representation in terms of principal parts as illustrated above, with all of its principal parts containing a finite number of terms, we refer to the singularities as poles. If a function has a representation as illustrated above in which a principal part, say for the singularity $z_0$, has an infinite number of negative power terms, we refer to $z_0$ as an essential singularity. A singularity of $f(z)$ at $z_0$ is called removable if $f(z)$ is not defined there, but could be. For example the function

![]()

can be defined to be ![]() at ![](), thus removing the singularity at ![]().

Any single-valued function that has no singularities other than poles at finite magnitude $z$ values is called meromorphic, with no regard to the behavior at infinity. These are the types of functions that we encounter most often in physics.

$\csc z=1/\sin z$ is meromorphic. We could perform a partial fraction decomposition, and discover that the cosecant has only simple poles (poles of order one).

![]() is entire, possessing an essential singularity, but it is at infinity.

A function may possess a region of the complex plane upon which it is single valued and differentiable. We say that a function possessing such a region is regular in it. Any function that has a power series valid in the entire complex plane is regular in the entire plane, and is then deemed an entire function.

We will now list and “prove” or at least rationalize some very useful theorems on integration of functions in regions of regularity. We will use the fact that regularity means differentiability, and so the functions involved satisfy the Cauchy-Riemann conditions.

Remember that if

\[ f(z)=U(x,y)+i\,V(x,y) \]

in which $U$ and $V$ are real functions, then for the derivative with respect to the single complex variable $z$ to be unique, $U$ and $V$ must satisfy

\[ {\partial U\over\partial x}={\partial V\over\partial y},\qquad {\partial U\over\partial y}=-{\partial V\over\partial x} \]

and a function $f(z)$ that satisfies this is called an analytic function, and these are the Cauchy-Riemann conditions.

There are two “potentials” that can be constructed from the Cauchy-Riemann conditions. Number one is $\phi(x,y)$, with

\[ U={\partial\phi\over\partial x},\qquad V=-{\partial\phi\over\partial y} \]

which automatically satisfies the second C.R. condition, $\partial U/\partial y=-\partial V/\partial x$, by virtue of $\partial^2\phi/\partial y\,\partial x=\partial^2\phi/\partial x\,\partial y$, and satisfies the first C.R. condition, $\partial U/\partial x=\partial V/\partial y$, if $\nabla^2\phi=0$. Number two is $\psi(x,y)$, with

\[ U={\partial\psi\over\partial y},\qquad V={\partial\psi\over\partial x} \]

which automatically satisfies the first C.R. condition and satisfies the second if $\nabla^2\psi=0$.

We have established that any analytic function can be constructed from these two “harmonic” potentials

\[ f(z)={\partial\phi\over\partial x}-i{\partial\phi\over\partial y}={\partial\psi\over\partial y}+i{\partial\psi\over\partial x} \]

such that

\[ f(z)\,dz=(U\,dx-V\,dy)+i\,(V\,dx+U\,dy)=d\phi+i\,d\psi \]

Now consider any closed path $C$ lying entirely within a simply connected region of regularity, and integrate analytic $f(z)$ along this path. The result can be written in terms of the potentials $\phi$ and $\psi$;

\[ \oint_C f(z)\,dz=\oint_C (U\,dx-V\,dy)+i\oint_C (V\,dx+U\,dy) \]

\[ \oint_C f(z)\,dz=\oint_C d\phi+i\oint_C d\psi \]

Therefore both of these integrals should be zero, since a proper real-valued function returns to its original value at a point $(x,y)$ after we walk along a closed curve starting and ending at this point.

Not so fast. We have already encountered one function that returns to its original value only after twice circling the origin, $\sqrt{z}$, and another that never returns to its original value if the origin is fully circled, namely $\ln z$.
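This behavior can be watched numerically. The Python sketch below follows the square root continuously around the unit circle by always choosing the root nearest the previous value, and accumulates the change in polar angle, which is the imaginary part of the logarithm. The function names and the step count are hypothetical choices for illustration.

```python
import cmath
import math


def continue_sqrt(loops, steps_per_loop=360):
    """Follow sqrt(z) continuously along the unit circle, starting from sqrt(1) = 1."""
    value = 1 + 0j
    for k in range(1, loops * steps_per_loop + 1):
        z = cmath.exp(2j * math.pi * k / steps_per_loop)
        root = cmath.sqrt(z)
        # of the two square roots, keep the one closer to the previous value
        value = root if abs(root - value) <= abs(-root - value) else -root
    return value


def accumulated_angle(loops, steps_per_loop=360):
    """Sum the small changes in polar angle along the same path."""
    total = 0.0
    previous = 1 + 0j
    for k in range(1, loops * steps_per_loop + 1):
        z = cmath.exp(2j * math.pi * k / steps_per_loop)
        total += cmath.phase(z / previous)
        previous = z
    return total


print(continue_sqrt(1), continue_sqrt(2))          # close to -1, then close to +1
print(accumulated_angle(1), accumulated_angle(2))  # close to 2*pi, then 4*pi
```

The square root comes back to its starting value only after the second loop, while the accumulated angle keeps growing by $2\pi$ per loop and never returns.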

\[ \oint_{|z|=1} {dz\over z}=\oint d(\ln z)=\oint d\left(\ln r+i\theta\right),\qquad z=re^{i\theta} \]

\[ \oint_{|z|=1} {dz\over z}=\oint d(\ln r)+i\oint d\theta \]

\[ \oint_{|z|=1} {dz\over z}=0+2\pi i \]

and there we have it;

integrals in the complex domain need to be evaluated keeping in mind that terms such as $dz/z$ are very special, because of the nature of the Riemann surface of the log function. Technically the imaginary part of $d(\ln z)$ is not an exact differential, because we cannot really construct a closed curve surrounding the singularity on its Riemann surface.
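A direct numerical check makes the special role of the log singularity concrete. The sketch below integrates $1/z$ around two circles with the trapezoid rule, one enclosing the origin and one that does not. The helper name `circle_integral`, the radii, and the off-center location are assumptions made here for illustration.

```python
import cmath
import math


def circle_integral(f, center, radius, n=2000):
    """Approximate the counterclockwise integral of f(z) dz around a circle."""
    total = 0j
    for k in range(n):
        theta = 2 * math.pi * k / n
        w = radius * cmath.exp(1j * theta)   # z - center on the circle
        total += f(center + w) * 1j * w      # f(z) * dz/dtheta
    return total * (2 * math.pi / n)


around_origin = circle_integral(lambda z: 1 / z, 0j, 1.0)
off_center = circle_integral(lambda z: 1 / z, 3 + 0j, 1.0)

print(around_origin)  # close to 2*pi*i
print(off_center)     # close to 0
```

Only the circle that surrounds the origin picks up the $2\pi i$; the off-center circle lies in a simply connected region where $1/z$ is regular, and the integral vanishes.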

At this point let's appeal to physics and consider a well-understood example, but in the context of complex integration. What does this all have to do with physics anyway? Consider for example a **static** electric field, which is created by a line charge ![]() somewhere in space. We know that the electric field of a line charge is conservative; no work is done in moving a test charge through the field in a closed path. Imagine then that

![]()

for such an electric field ![](), then

![]()

The real part of this expression is the work done in moving a unit test charge from ![]() to ![]() through this field!

![]()

This will be zero if we let ![]() and close the path of integration, even if it surrounds the origin, the point through which we let our line charge pass

![]()

However in order for ![]() to be zero, we need to have

![]()

as well, but this is not true if the path of integration encloses the origin

![]()

notice that the polar angle $\theta'$ has

\[ d\theta'=dV={{dy\over x}-{y \, dx\over x^2}\over 1+{y^2\over x^2}}={x \, dy-y \, dx\over x^2+y^2} \]

which does not exist at the origin, and so we must remove the origin from its domain. Then

![]()

but we can do the integral in a second way and get a different answer

![]()

What went wrong?

Essentially, the region in which our path of integration lies is not simply connected; the function ![]() is not defined at the origin, and so we must delete $z=0$ from the domain of integration, making it annular, which is not simply connected. If the path of integration were a circle that did not contain the origin (as in the figure below), then this last integral would have been zero and we would have ![]().

![]()

![]()

What is the function ![]()?

![]()

which is clearly regular in the off-center disk but not in one containing the origin.

Why is this electromagnetic recourse so useful? Consider a region containing an electrostatic field, but no charges. In such a region the electric field satisfies Gauss’s law with no charges, which we will later show means that the voltage $V$ satisfies

\[ {\partial^2 V\over\partial x^2}+{\partial^2 V\over\partial y^2}=0 \]

a simple variable change $z=x+iy$, $\bar z=x-iy$ gives us

\[ 4{\partial^2 V\over\partial z\,\partial\bar z}=0 \]

which is true if

![]()

and so the voltage is a regular, analytic function in such a region!
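The link between analytic functions and charge-free voltages can be probed numerically. Assuming the equation meant here is the two-dimensional Laplace equation, the sketch below applies a five-point finite-difference Laplacian to the real and imaginary parts of an analytic function, and to a non-harmonic function for contrast. The choice of $e^z$, the comparison function $x^2+y^2$, and the sample point are illustrative assumptions.

```python
import cmath


def laplacian(g, x, y, h=1e-3):
    """Five-point finite-difference estimate of g_xx + g_yy at (x, y)."""
    return (g(x + h, y) + g(x - h, y) + g(x, y + h) + g(x, y - h)
            - 4 * g(x, y)) / h ** 2


# Real and imaginary parts of the analytic function exp(z)
real_part = lambda x, y: cmath.exp(complex(x, y)).real
imag_part = lambda x, y: cmath.exp(complex(x, y)).imag

# A function that is NOT harmonic, for comparison: its Laplacian is 4
bowl = lambda x, y: x * x + y * y

lap_real = laplacian(real_part, 0.4, 1.1)
lap_imag = laplacian(imag_part, 0.4, 1.1)
lap_bowl = laplacian(bowl, 0.4, 1.1)

print(lap_real, lap_imag)  # both close to 0
print(lap_bowl)            # close to 4
```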

An immediate corollary of our theorem is

Theorem.

For any simply connected domain of regularity;

\[ \int_{C_1} f(z)\,dz=\int_{C_2} f(z)\,dz \]

where $C_1$ and $C_2$ are two curves both beginning on $z_1$ and ending on $z_2$.

This one is simple, take the two curves and combine them to make a closed curve $C$

\[ \oint_C f(z)\,dz=\int_{C_1} f(z)\,dz-\int_{C_2} f(z)\,dz=0 \]

in which we go from $z_1$ to $z_2$ along $C_1$ in the first integral, but the wrong way on $C_2$ on the second, resulting in

\[ \int_{C_1} f(z)\,dz=\int_{C_2} f(z)\,dz \]

The most important regions in which to embed integration paths for our purposes will be annular regions surrounding singularities, made by adjoining two simply connected regions of regularity for a function $f(z)$.

Such a region has an inner bounding curve ![](), an outer bounding curve ![](), and between them a regular function could have a convergent partial fraction representation called a Laurent series

\[ f(z)=\sum_{n=-\infty}^{\infty} a_n (z-z_0)^n \]

however this region is not simply connected, and that is the source of all of the power.

Theorem. Consider any region of regularity for $f(z)$, simply connected or not. Let $C_1$ and $C_2$ be any closed curves lying within that region, both surrounding the inner bounding curve of the domain of regularity. Then

\[ \oint_{C_1} f(z)\,dz=\oint_{C_2} f(z)\,dz \]

Why is this so important? It says that when doing a line integral in an annular region, the path of integration is immaterial; simply choose the one that is easiest to work with. All that matters in the end is the fact that the paths enclose poles of $f(z)$. The proof is simple. Consider the curve $C_1$ to be the innermost, drawn here within the shaded region of regularity, and $C_2$ to be the outer.

Connect them with pairs of bridges, dividing the region between them into two simply connected domains of regularity $D_1$ and $D_2$. Then denoting the boundaries of $D_1$ and $D_2$ by $\partial D_1$ and $\partial D_2$ respectively

\[ \oint_{\partial D_1} f(z)\,dz+\oint_{\partial D_2} f(z)\,dz=0 \]

However the integrations over the pairs of bridges, being arbitrarily close together, cancel, and so

\[ \oint_{C_2} f(z)\,dz+\oint_{-C_1} f(z)\,dz=0 \]

in which we use $-C_1$ to indicate that we are integrating around $C_1$ in the negative sense, and so

\[ \oint_{C_1} f(z)\,dz=\oint_{C_2} f(z)\,dz \]

as we wanted to show.
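The freedom to deform the contour is easy to test numerically. The sketch below integrates one function over a small circle and over an ellipse, both of which surround the same singularity and stay inside the same region of regularity. The test function $1/\big(z(z-5)\big)$, the two contours, and the helper name are assumptions chosen here for illustration.

```python
import cmath
import math


def closed_contour_integral(f, path, dpath, n=4000):
    """Trapezoid-rule integral of f(z) dz over z = path(t), 0 <= t < 2*pi."""
    step = 2 * math.pi / n
    return sum(f(path(k * step)) * dpath(k * step) for k in range(n)) * step


# Regular in the annulus 0 < |z| < 5 (singular at z = 0 and z = 5)
f = lambda z: 1 / (z * (z - 5))

# Contour 1: unit circle around the origin
small_circle = closed_contour_integral(
    f,
    lambda t: cmath.exp(1j * t),
    lambda t: 1j * cmath.exp(1j * t),
)

# Contour 2: ellipse with semi-axes 3 and 1, also enclosing only z = 0
ellipse = closed_contour_integral(
    f,
    lambda t: 3 * math.cos(t) + 1j * math.sin(t),
    lambda t: -3 * math.sin(t) + 1j * math.cos(t),
)

print(small_circle, ellipse)  # the two values agree
```

Both contours give the same value even though their shapes differ, since neither crosses a singularity when deformed into the other.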

Our final two theorems are what make complex variables so powerful in integration, and in solving electromagnetism applications.

Theorem. A regular function on a region of regularity $R$ has its interior values determined entirely by the values of $f(z)$ on the boundary $C$ of the region of regularity: for any interior point $z_0$,

\[ f(z_0)={1\over 2\pi i}\oint_C {f(z)\over z-z_0}\,dz \]

In electromagnetism, this is called the Mean Value theorem, and is used to compute voltages in charge-free volumes numerically by relaxation techniques.

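We will “prove” this theorem in a way that you will emulate in nearly all computational situations. First let’s write

\[ I=\oint_C {f(z)\over z-z_0}\,dz \]

and invoke the previous theorem; the path of integration is immaterial, deform the contour to a simple one; a circle $C_\epsilon$ of very small radius $\epsilon$ around $z_0$

\[ z=z_0+\epsilon e^{i\theta},\qquad 0\le\theta<2\pi \]

\[ dz=i\epsilon e^{i\theta}\,d\theta \]

for which

\[ {dz\over z-z_0}=i\,d\theta \]

then

\[ I=\oint_{C_\epsilon} {f(z)\over z-z_0}\,dz \]

\[ I=i\int_0^{2\pi} f(z_0+\epsilon e^{i\theta})\,d\theta \]

and use the fact that our function, being regular in this domain, possesses a valid power series around $z_0$;

\[ f(z)=\sum_{n=0}^{\infty} c_n (z-z_0)^n,\qquad c_0=f(z_0) \]

\[ f(z_0+\epsilon e^{i\theta})=f(z_0)+\sum_{n=1}^{\infty} c_n\epsilon^n e^{in\theta} \]

Put these into our last integral, and shrink $\epsilon$ down to nothing, all of the integrals with $n\ge 1$ become zero

\[ I=2\pi i\,f(z_0)+i\sum_{n=1}^{\infty} c_n\epsilon^n\int_0^{2\pi} e^{in\theta}\,d\theta \]

\[ \lim_{\epsilon\rightarrow 0} I=2\pi i\,f(z_0) \]

since each one contains a positive power of $\epsilon$.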
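The statement that boundary values fix interior values can be checked numerically. The sketch below assumes the theorem takes the usual Cauchy integral form, $f(z_0)=\frac{1}{2\pi i}\oint f(z)\,dz/(z-z_0)$, and also checks the circle-average (mean value) form. The test function $e^z$, the contour radius, and the interior point are illustrative assumptions.

```python
import cmath
import math


def cauchy_formula(f, z0, center=0j, radius=2.0, n=2000):
    """Recover f(z0) from values of f on a circle that encloses z0."""
    step = 2 * math.pi / n
    total = 0j
    for k in range(n):
        w = radius * cmath.exp(1j * k * step)  # z - center on the circle
        z = center + w
        total += f(z) / (z - z0) * 1j * w      # integrand times dz/dtheta
    return total * step / (2j * math.pi)


def circle_average(f, z0, radius=0.5, n=2000):
    """Average of f over a circle centered on z0 (the mean value form)."""
    return sum(f(z0 + radius * cmath.exp(2j * math.pi * k / n))
               for k in range(n)) / n


z0 = 0.3 + 0.2j  # interior point (assumption)
recovered = cauchy_formula(cmath.exp, z0)
averaged = circle_average(cmath.exp, z0)

print(recovered, averaged, cmath.exp(z0))  # all three agree
```

The second function is the form used in relaxation methods: the value at a point is replaced by the average of the values surrounding it.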

Our final theorem is the most powerful of all, and enables us to not only do the most fantastic feats of integration, but can also be used to extract information of all types from functions, including their periodicity properties, and asymptotic behaviors.

Cauchy’s Theorem.

Let the curve $C$ lie within an annular region of regularity for some function $f(z)$. Then

\[ \oint_C f(z)\,dz=2\pi i\sum_k a^{(k)}_{-1} \]

in which $a^{(k)}_{-1}$ is the coefficient of the term $1/(z-z_k)$ in the principal part of $f(z)$ for pole $z_k$, and the sum runs over the poles enclosed by $C$. These are called the **residues** of the function, and this is often referred to as the residue theorem.

The proof is almost trivial. Since the shape of the contour is irrelevant as long as it encloses the pole, let $C$ be a circle of radius $\epsilon$ around the pole of $f(z)$ at $z_0$, then $z=z_0+\epsilon e^{i\theta}$, $dz=i\epsilon e^{i\theta}\,d\theta$ and the function $f(z)$ possesses a Laurent series in the annular region surrounding the pole, the region in which $C$ is drawn.

\[ \oint_C f(z)\,dz=\sum_{n} a^{(0)}_n\oint_C (z-z_0)^n\,dz \]

\[ \oint_C (z-z_0)^n\,dz=i\epsilon^{n+1}\int_0^{2\pi} e^{i(n+1)\theta}\,d\theta=2\pi i\quad\mbox{if}\quad n=-1 \]

and is $0$ if $n\ne -1$. Only the residue term survives the integration

\[ \oint_C f(z)\,dz=2\pi i\,a^{(0)}_{-1} \]

Why is this so powerful? It reduces the difficult problem of integration to simply finding certain coefficients of a function's Laurent series. To evaluate integrals, we simply compute residues at poles ![]()

![]()
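As a hedged illustration of the residue theorem, the Python sketch below compares a numerically evaluated contour integral with $2\pi i$ times the sum of the enclosed residues. The test function $e^z/\big(z(z-1)\big)$ is chosen here for illustration; its residues at the simple poles $z=0$ and $z=1$, worked out by hand as $-1$ and $e$, are part of that assumption rather than something taken from the text.

```python
import cmath
import math


def circle_integral(f, center, radius, n=2000):
    """Approximate the counterclockwise integral of f(z) dz around a circle."""
    total = 0j
    for k in range(n):
        theta = 2 * math.pi * k / n
        w = radius * cmath.exp(1j * theta)   # z - center on the circle
        total += f(center + w) * 1j * w      # f(z) * dz/dtheta
    return total * (2 * math.pi / n)


# Simple poles at z = 0 and z = 1, both inside the circle |z| = 2
f = lambda z: cmath.exp(z) / (z * (z - 1))

# Residues computed by hand: (z - 0) f(z) -> -1 at z = 0, (z - 1) f(z) -> e at z = 1
residues = [-1.0, math.e]

numeric = circle_integral(f, 0j, 2.0)
predicted = 2j * math.pi * sum(residues)

print(numeric, predicted)  # the two values agree
```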