These are excerpts from a 300-level course that I taught for a Math department, based on the excellent book “A First Course in Probability” by Sheldon Ross. Hopefully they will provide you with all that you need for Physics 715 and for understanding applications in machine learning.

Consider a sample space $S$ comprised of events or objects, and subsets $E$ and $F$ such that $E, F\subseteq S$.

Example

Consider three coin tosses, all independent. What is the sample space $S$ of outcomes?

To solve this you must generate all possible outcomes, either by simply dreaming them up from experience,

\[S=\{HHH,\ HHT,\ HTH,\ THH,\ HTT,\ THT,\ TTH,\ TTT\}\]

or by using a combinatorial counting or generating function

\[(H+T)^3=H^3+3H^2T+3HT^2+T^3\]

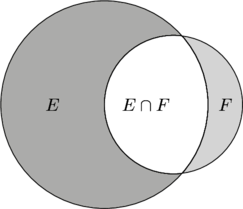
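A minimal Python sketch of both routes, assuming outcomes are written as strings of `H` and `T`: `itertools.product` does the job of dreaming up every outcome, and counting the heads in each outcome reproduces the coefficients $1, 3, 3, 1$ of the expansion of $(H+T)^3$.

```python
from collections import Counter
from itertools import product

# Sample space S of three independent coin tosses, e.g. "HTH".
S = ["".join(tosses) for tosses in product("HT", repeat=3)]

# Coefficient of H^k T^(3-k) in (H + T)^3 = number of outcomes with exactly k heads.
coefficients = Counter(outcome.count("H") for outcome in S)

print(len(S), S)
print({k: coefficients[k] for k in range(3, -1, -1)})
```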

We define the union of two subsets $E$ and $F$ of $S$ to be the collection of elements of $S$ that are either in $E$, or in $F$, or in both $E$ and $F$, and denote the union as $E\cup F$. The collection of elements in both $E$ and $F$ we call the intersection, and denote it in one of two ways:

\[E\cap F=EF\]

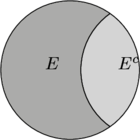

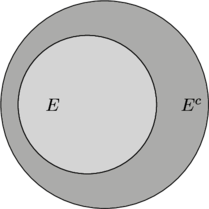

The collection of elements in $S$ but not in $E$ we call the complement of $E$, and denote it as $E^c$.

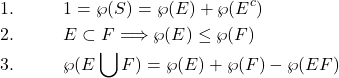

Then we have two extremely useful and important statements

\[E\cup F=E\cup E^cF,\qquad F=EF\cup E^cF\]

both of which you can prove with Venn diagrams. Notice that $E$ and $E^cF$ are mutually exclusive sets; they have an empty intersection

\[E\cap E^cF=\emptyset\]

Unions and intersections can be shown via Venn diagrams to satisfy the basic properties of algebraic binary operations, such as associativity, commutativity and distributivity

\[(E\cup F)\cup G=E\cup(F\cup G),\qquad (EF)G=E(FG)\]

\[E\cup F=F\cup E,\qquad EF=FE\]

\[(E\cup F)G=EG\cup FG,\qquad EF\cup G=(E\cup G)(F\cup G)\]

(it would be a good exercise to draw Venn diagrams illustrating these points).

Consider a sample space $S$ and an experiment whose outcome is always some element of $S$. Repeat the experiment many times under identical conditions. Let $n(E)$ be the number of times in the first $n$ repetitions that we get an outcome in $E$. We define the probability of this outcome as

\[\wp(E)=\lim_{n\to\infty}\frac{n(E)}{n}\]

Example. Toss three coins; what is the probability of $2$ heads in any order? From the enumeration above we see $n(E)=3$ and $n(S)=8$, so $\wp(E)=n(E)/n(S)=3/8$.
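The frequency definition of $\wp(E)$ can be watched converging with a short simulation. This is a sketch under stated assumptions: the coin is fair, Python's `random` module stands in for the physical tosses, and the seed is fixed only so the run is reproducible.

```python
import random
from itertools import product

def frequency_estimate(n_trials: int, seed: int = 0) -> float:
    """Relative frequency n(E)/n of E = 'exactly two heads in three tosses'."""
    rng = random.Random(seed)  # seeded pseudo-random generator, assumed fair coin
    hits = 0
    for _ in range(n_trials):
        tosses = [rng.choice("HT") for _ in range(3)]
        if tosses.count("H") == 2:
            hits += 1
    return hits / n_trials

# Exact value by counting equally likely outcomes: n(E)/n(S).
S = list(product("HT", repeat=3))
exact = sum(1 for s in S if s.count("H") == 2) / len(S)

for n in (100, 10_000, 100_000):
    print(n, frequency_estimate(n))
print("exact", exact)
```

The estimates wander for small $n$ and settle near $3/8$ as $n$ grows, which is the limit in the definition.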

Example. The Gibbs microcanonical distribution. All possible states of a system of energy $E$, of which there are $\Omega(E)$, are equally likely, so the probability that the system is in any one particular state $i$ of energy $E$ is

\[\wp_i=\frac{1}{\Omega(E)}\]

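Fundamental properties of probabilities can be derived from the following propositions, all of which are fairly simple to prove:

\[\begin{array}{ll} 1. & \wp(E^c)=1-\wp(E)\\ 2. & E\subseteq F\ \Rightarrow\ \wp(E)\le\wp(F)\\ 3. & \wp(E\cup F)=\wp(E)+\wp(F)-\wp(EF)\end{array}\]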

Proof.

\[\wp(E\cup F)=\wp(E)+\wp(E^cF)\]

This is certainly true since the two sets $E$ and $E^cF$ are mutually exclusive. However $F=EF\cup E^cF$, and so

\[\wp(F)=\wp(EF)+\wp(E^cF)\]

again since both of these sets are mutually exclusive. This implies that

\[\wp(E^cF)=\wp(F)-\wp(EF)\]

and we simply insert this into the first line of the proof, thereby proving 3.
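The three propositions can be spot-checked on the three-coin sample space with exact fractions. A sketch only: the events `E`, `F` and `G` below are hypothetical choices made for illustration, and all eight outcomes are assumed equally likely.

```python
from fractions import Fraction
from itertools import product

# Equally likely outcomes of three coin tosses.
S = set(product("HT", repeat=3))

def prob(event: set) -> Fraction:
    """Probability of an event (a subset of S) when all outcomes are equally likely."""
    return Fraction(len(event), len(S))

# Hypothetical events chosen for illustration.
E = {s for s in S if s[0] == "H"}                  # first toss is heads
F = {s for s in S if s.count("H") >= 2}            # at least two heads
G = {s for s in S if s[0] == "H" and s[1] == "H"}  # first two heads, so G is a subset of E

print(prob(S - E) == 1 - prob(E))                     # proposition 1
print(G <= E and prob(G) <= prob(E))                  # proposition 2
print(prob(E | F) == prob(E) + prob(F) - prob(E & F)) # proposition 3
```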

Example. An urn contains 3 red and 7 black balls. Players A and B draw balls from the urn consecutively, A first, until a red ball is drawn. Find the probability that A selects the red ball. Note that the balls are not replaced in the urn.

The probability of success on the first try for A is $3/10$, of failure $7/10$. The probability of success by B on the next draw is $3/9$, and of failure $6/9$. Success on the second try for A is contingent upon failure at the first draw of A followed by failure of B, $\frac{7}{10}\cdot\frac{6}{9}\cdot\frac{3}{8}$, with the factor $3/8$ because at that stage two of the balls have been removed. Continuing, first successes at the different drawings are mutually exclusive events (such probabilities add), so

\[\wp(A)=\frac{3}{10}+\frac{7}{10}\frac{6}{9}\frac{3}{8}+\frac{7}{10}\frac{6}{9}\frac{5}{8}\frac{4}{7}\frac{3}{6}+\frac{7}{10}\frac{6}{9}\frac{5}{8}\frac{4}{7}\frac{3}{6}\frac{2}{5}\frac{3}{4}\]

\[=\frac{3}{10}+\frac{7}{40}+\frac{1}{12}+\frac{1}{40}=\frac{7}{12}\approx 0.583\]
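The term-by-term sum over A's turns can be automated with exact fractions. A minimal sketch, assuming (as the solution does) that A draws first, that draws are without replacement, and that there is at least one red ball; the function name is hypothetical.

```python
from fractions import Fraction

def prob_first_player_draws_red(red: int, black: int) -> Fraction:
    """Players A and B alternate drawing without replacement, A first.
    Return the probability that A draws the first red ball (assumes red >= 1)."""
    total = Fraction(0)
    p_all_black_so_far = Fraction(1)   # probability that every earlier draw was black
    remaining_black = black
    draw = 1
    while True:
        n = red + remaining_black               # balls left in the urn
        p_red_now = Fraction(red, n)
        if draw % 2 == 1:                       # odd-numbered draws belong to A
            total += p_all_black_so_far * p_red_now
        if remaining_black == 0:                # next draw is certainly red
            break
        p_all_black_so_far *= Fraction(remaining_black, n)
        remaining_black -= 1
        draw += 1
    return total

print(prob_first_player_draws_red(3, 7))
```

For 3 red and 7 black balls this reproduces $7/12$.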

Example. An urn contains 5 red, 6 blue and 8 green balls. If a set of 3 balls is randomly selected, what is the probability that all three will be the same color? Repeat with the assumption that when a ball is drawn its color is noted and it is then put back into the urn.

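Probability that all three drawn are the same color:

\[\wp=\frac{\binom{5}{3}+\binom{6}{3}+\binom{8}{3}}{\binom{19}{3}}\]

\[=\frac{10+20+56}{969}=\frac{86}{969}\approx 0.0888\]

With replacement:

\[\wp=\left(\frac{5}{19}\right)^3+\left(\frac{6}{19}\right)^3+\left(\frac{8}{19}\right)^3=\frac{853}{6859}\approx 0.124\]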
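Both counts are quick to verify with `math.comb` (Python 3.8+) and exact fractions. A sketch, assuming 5 red, 6 blue and 8 green balls and 3 draws as in the problem statement.

```python
from fractions import Fraction
from math import comb

counts = {"red": 5, "blue": 6, "green": 8}
N = sum(counts.values())   # 19 balls in the urn
k = 3                      # balls drawn

# Without replacement: all k balls chosen from a single color.
same_without = Fraction(sum(comb(c, k) for c in counts.values()), comb(N, k))

# With replacement: k independent draws, each of the same color.
same_with = sum(Fraction(c, N) ** k for c in counts.values())

print(same_without, float(same_without))
print(same_with, float(same_with))
```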

Example

Urn A contains 3 red and 3 black balls, urn B contains 4 red and 6 black balls. If a ball is selected from each urn, what is the probability that they will be the same color?

\[\wp=\frac{3}{6}\cdot\frac{4}{10}+\frac{3}{6}\cdot\frac{6}{10}=\frac{12+18}{60}=\frac{1}{2}\]
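The same number follows from summing, over colors, the product of the two independent draw probabilities. A small sketch; the dictionaries encode urn A (3 red, 3 black) and urn B (4 red, 6 black), and the function name is hypothetical.

```python
from fractions import Fraction

urn_a = {"red": 3, "black": 3}
urn_b = {"red": 4, "black": 6}

def p_same_color(a: dict, b: dict) -> Fraction:
    """One ball from each urn, drawn independently; probability the colors match."""
    n_a, n_b = sum(a.values()), sum(b.values())
    return sum(Fraction(a[c], n_a) * Fraction(b.get(c, 0), n_b) for c in a)

print(p_same_color(urn_a, urn_b))
```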

Example

A town has 4 TV repairmen. If 4 sets break down, what is the probability that exactly $n$ of the repairmen will be called, for $n=1,2,3,4$?

There are $4$ repairmen and $4$ service calls. The sample space is the set of routings of the service calls, with $4^4=256$ possible routings, all taken to be equally likely, so that

\[\wp(n)=\frac{\text{number of routings that use exactly } n \text{ repairmen}}{4^4}\]

Let’s use a generating function (a partition function). Count up the sum of the coefficients of the terms in which only two of the repairman symbols $x,y,z,w$ occur or, more generally, the terms in which $n$ of the four symbols appear, $n=1,2,3,4$:

\[(x+y+z+w)^4\]

\[=\sum_{a+b+c+d=4}{4!\over a!b!c!d!}x^a y^bz^cw^d, \quad 4^4\cdot \wp(n)=\left\{\begin{array}{ll} 1 & 4{4!\over 4!0!0!0!}\\ 2 & {4\cdot 3\over 2}{4!\over 2!2!0!0!}+4\cdot 3 {4!\over 3!1!0!0!}\\ 3 & 4\cdot 3{4!\over 2!1!1!0!}\\ 4 & {4!\over 1!1!1!1!}\end{array}\right.\]

so that $\wp(1)=4/256=1/64$, $\wp(2)=(36+48)/256=21/64$, $\wp(3)=144/256=9/16$ and $\wp(4)=24/256=3/32$, which sum to one.

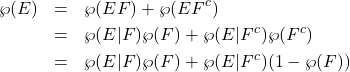
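Brute-force enumeration of all $4^4$ routings is a useful cross-check on the generating-function count. A sketch under the assumption that each call goes independently and uniformly to one of the repairmen; the function name is hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

def repairmen_distribution(n_repairmen: int = 4, n_calls: int = 4) -> dict:
    """Probability that exactly n distinct repairmen are called, when each call is
    routed independently and uniformly to one of the repairmen."""
    routings = list(product(range(n_repairmen), repeat=n_calls))  # 4**4 = 256 routings
    tally = Counter(len(set(r)) for r in routings)                # distinct repairmen used
    return {n: Fraction(tally[n], len(routings)) for n in sorted(tally)}

for n, p in repairmen_distribution().items():
    print(n, p)
```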

Conditional probability

We define the conditional probability, à la Kolmogorov, that $E$ will occur given that $F$ has already occurred as $\wp(E|F)$ (conditioning on $F$). Notice that, in general,

\[\wp(E|F)=\frac{\wp(EF)}{\wp(F)},\qquad \wp(F)>0\]

This relation can also be taken to be an axiom of probability theory.

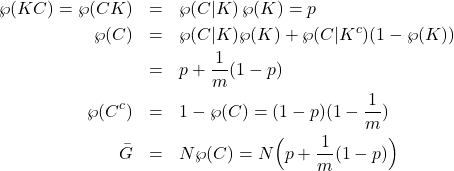

Example. Suppose that on a multiple choice exam in which each question has $m$ possible choices, a student has a probability $p$ of knowing ($K$) the answer to a problem, and a probability of $1/m$ of guessing the correct answer if he or she must guess. Let $\wp(C)$ be the probability that the student gets a problem correct, and let $\wp(K)=p$ be the probability that the student actually knows the correct answer. Then it is clear that

\[\wp(C)=\wp(C|K)\wp(K)+\wp(C|K^c)\wp(K^c)=p+\frac{1-p}{m}\]

since the student will mark it correct if they know the answer, and must guess if they do not.

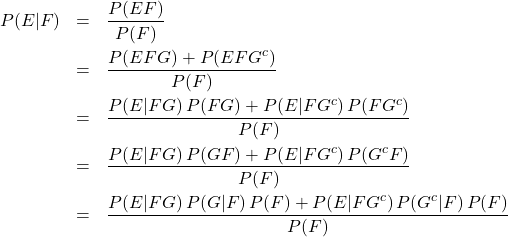

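The fundamental tools for working with conditional probabilities are

\[\wp(EF)=\wp(E|F)\wp(F)\]

\[\wp(E)=\wp(EF)+\wp(EF^c)=\wp(E|F)\wp(F)+\wp(E|F^c)\wp(F^c)\]

which imply

\[\wp(F|E)=\frac{\wp(EF)}{\wp(E)}=\frac{\wp(E|F)\wp(F)}{\wp(E)}\]

and, for mutually exclusive events $F_1,\dots,F_n$ with $\bigcup_i F_i=S$,

\[\wp(F_j|E)=\frac{\wp(E|F_j)\wp(F_j)}{\sum_{i=1}^n\wp(E|F_i)\wp(F_i)}\qquad\text{(Bayes' Theorem)}\]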

Example. Under the conditions of the previous example, what is the probability that a student actually knows the answer if he or she did answer it correctly? This is a standard exam problem in a probability course.

\[\wp(K|C)=\frac{\wp(C|K)\wp(K)}{\wp(C)}=\frac{p}{p+(1-p)/m}\]

\[=\frac{mp}{1+(m-1)p}\]

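For the record, let's compute a few other quantities:

\[\wp(K^c|C)=1-\wp(K|C)=\frac{1-p}{1+(m-1)p}\]

\[\wp(C^c)=(1-p)\frac{m-1}{m}\]

\[\langle\text{grade}\rangle=N\wp(C)=N\left(p+\frac{1-p}{m}\right)\]

where the last quantity is the average expected grade on an exam with $N$ multiple choice questions.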
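The multiple-choice model fits in a few lines with exact fractions. A sketch: the values $p=1/2$, $m=5$ and $N=20$ used below are hypothetical, chosen only to give concrete numbers.

```python
from fractions import Fraction

def exam_quantities(p: Fraction, m: int, n_questions: int) -> dict:
    """Multiple-choice model: the student knows an answer with probability p,
    otherwise guesses uniformly among m choices."""
    p_correct = p + (1 - p) / m                  # total probability of C
    p_knows_given_correct = p / p_correct        # Bayes: P(K|C)
    return {
        "P(C)": p_correct,
        "P(K|C)": p_knows_given_correct,
        "P(K^c|C)": 1 - p_knows_given_correct,
        "expected score": n_questions * p_correct,
    }

# Hypothetical exam: p = 1/2, five choices per question, 20 questions.
q = exam_quantities(Fraction(1, 2), m=5, n_questions=20)
for name, value in q.items():
    print(name, value)
```

Here $\wp(C)=3/5$, $\wp(K|C)=5/6$, and the expected score is 12 out of 20, in agreement with $mp/(1+(m-1)p)$.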

We say that two events $E$ and $F$ are independent if

\[\wp(EF)=\wp(E)\wp(F)\]

Example. Consider an experiment in which independent trials, each with a probability of success equal to $p$, are performed in succession. If $n$ trials are performed, find the probability that there will be at least one success among them.

\[\wp(\text{at least one success})=1-\wp(\text{no successes})\]

Let $E_i$ be the event that trial $i$ is a failure. Since the trials are independent,

\[\wp(E_1E_2\cdots E_n)=\prod_{i=1}^n\wp(E_i)=(1-p)^n\]

and so the probability of at least one success is

\[1-(1-p)^n\]
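The complement trick can be checked against the direct sum over "exactly $k$ successes", $k=1,\dots,n$. A sketch; the die example ($p=1/6$, $n=4$) is a hypothetical illustration.

```python
from fractions import Fraction
from math import comb

def at_least_one_success(p: Fraction, n: int) -> Fraction:
    """Complement of 'all n independent trials fail'."""
    return 1 - (1 - p) ** n

def at_least_one_success_by_sum(p: Fraction, n: int) -> Fraction:
    """Same quantity, summing the probabilities of exactly k successes, k = 1..n."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(1, n + 1))

# Hypothetical example: at least one six in four rolls of a fair die.
p, n = Fraction(1, 6), 4
print(at_least_one_success(p, n), float(at_least_one_success(p, n)))
print(at_least_one_success_by_sum(p, n))
```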

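Example. Prove that if $\wp(E_1E_2\cdots E_i)>0$ for $i=1,\dots,n-1$, then

\[\wp(E_1E_2\cdots E_n)=\wp(E_1)\,\wp(E_2|E_1)\,\wp(E_3|E_1E_2)\cdots\wp(E_n|E_1\cdots E_{n-1})\]

Start with

\[\wp(E_2|E_1)=\frac{\wp(E_1E_2)}{\wp(E_1)},\qquad \wp(E_3|E_1E_2)=\frac{\wp(E_1E_2E_3)}{\wp(E_1E_2)}\]

\[\cdots,\qquad \wp(E_n|E_1\cdots E_{n-1})=\frac{\wp(E_1\cdots E_n)}{\wp(E_1\cdots E_{n-1})}\]

and just multiply all of these together; the product telescopes, leaving

\[\wp(E_1)\,\wp(E_2|E_1)\cdots\wp(E_n|E_1\cdots E_{n-1})=\wp(E_1)\frac{\wp(E_1\cdots E_n)}{\wp(E_1)}=\wp(E_1E_2\cdots E_n)\]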

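Example. Prove that if $E_1,\dots,E_n$ are independent events, then $\wp(E_1\cup\cdots\cup E_n)=1-\prod_{i=1}^n\left[1-\wp(E_i)\right]$.

This follows from inclusion/exclusion, or from De Morgan's law

\[\wp(E_1\cup\cdots\cup E_n)=1-\wp\big((E_1\cup\cdots\cup E_n)^c\big)=1-\wp(E_1^cE_2^c\cdots E_n^c)\]

for independent events (whose complements are also independent), with

\[\wp(E_1^cE_2^c\cdots E_n^c)=\prod_{i=1}^n\wp(E_i^c)\]

and $\wp(E_i^c)=1-\wp(E_i)$.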
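The identity can be checked by brute force: enumerate all $2^n$ patterns of which $E_i$ occur, weight each pattern by the product of the marginals (this is where independence enters), and add up the patterns in which at least one event occurs. A sketch; the three probabilities used are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import prod

def union_prob_by_enumeration(ps: list) -> Fraction:
    """P(E_1 or ... or E_n) for independent events with P(E_i) = ps[i],
    found by summing over all 2^n joint occur/not-occur patterns."""
    total = Fraction(0)
    for pattern in product((True, False), repeat=len(ps)):
        # Independence: the joint probability is the product of the marginals.
        weight = prod(p if occurs else 1 - p for p, occurs in zip(ps, pattern))
        if any(pattern):            # at least one E_i occurred
            total += weight
    return total

def union_prob_formula(ps: list) -> Fraction:
    """1 - prod_i (1 - P(E_i))."""
    return 1 - prod(1 - p for p in ps)

ps = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 4)]   # hypothetical values
print(union_prob_by_enumeration(ps), union_prob_formula(ps))
```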

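Example. Prove directly that $\wp(E|F)=\wp(E|FG)\,\wp(G|F)+\wp(E|FG^c)\,\wp(G^c|F)$.

\[\wp(E|FG)\,\wp(G|F)+\wp(E|FG^c)\,\wp(G^c|F)=\frac{\wp(EFG)}{\wp(FG)}\frac{\wp(FG)}{\wp(F)}+\frac{\wp(EFG^c)}{\wp(FG^c)}\frac{\wp(FG^c)}{\wp(F)}\]

\[=\frac{\wp(EFG)+\wp(EFG^c)}{\wp(F)}=\frac{\wp(EF)}{\wp(F)}=\wp(E|F)\]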

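Example. Three cards are randomly drawn, without replacement, from a deck of 52 playing cards. Find the conditional probability that the first card is a spade, given that the second and third cards are spades.

Let $E_i$ be the event that the $i$th card drawn is a spade. Then

\[\wp(E_1|E_2E_3)=\frac{\wp(E_1E_2E_3)}{\wp(E_2E_3)}\]

\[\wp(E_1E_2E_3)=\frac{13\cdot12\cdot11}{52\cdot51\cdot50},\qquad \wp(E_2E_3)=\frac{13\cdot12}{52\cdot51}\]

where the second result holds by symmetry, since any two draw positions are as likely to hold two spades as the first two. Therefore

\[\wp(E_1|E_2E_3)=\frac{11}{50}=0.22\]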
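Enumerating all $52\cdot51\cdot50$ equally likely ordered draws confirms the symmetry argument. A sketch, assuming the cards are labelled 0 to 51 with 0 to 12 playing the role of the spades (a hypothetical labelling).

```python
from fractions import Fraction
from itertools import permutations

def is_spade(card: int) -> bool:
    """Hypothetical labelling: cards 0..12 are the spades."""
    return card < 13

given = 0   # ordered draws whose 2nd and 3rd cards are spades
both = 0    # ... whose 1st card is also a spade
for first, second, third in permutations(range(52), 3):   # 52*51*50 equally likely draws
    if is_spade(second) and is_spade(third):
        given += 1
        if is_spade(first):
            both += 1

print(Fraction(both, given))
```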

Example. Suppose that $5\%$ of men and $0.25\%$ of women are colorblind. A colorblind person is chosen at random from a population containing equal numbers of men and women. What is the probability of it being a man?

$\wp(C|M)=0.05$, $\wp(C|W)=0.0025$, $\wp(M)=\wp(W)=1/2$;

\[\wp(M|C)=\frac{\wp(C|M)\wp(M)}{\wp(C|M)\wp(M)+\wp(C|W)\wp(W)}=\frac{0.05}{0.05+0.0025}=\frac{20}{21}\approx 0.952\]
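Bayes' theorem with the two-element partition {man, woman} is a one-liner with exact fractions. A sketch, assuming colorblindness rates of 5% for men and 0.25% for women and, by default, equal numbers of men and women; the function name is hypothetical.

```python
from fractions import Fraction

def p_man_given_colorblind(p_cb_man: Fraction, p_cb_woman: Fraction,
                           p_man: Fraction = Fraction(1, 2)) -> Fraction:
    """Bayes' theorem with the partition {man, woman}."""
    p_woman = 1 - p_man
    numerator = p_cb_man * p_man
    return numerator / (numerator + p_cb_woman * p_woman)

# Assumed rates: 5% of men, 0.25% of women.
ans = p_man_given_colorblind(Fraction(5, 100), Fraction(25, 10_000))
print(ans, float(ans))
```

The optional `p_man` argument makes it easy to redo the problem for a population with unequal numbers of men and women.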

Conditional probabilities in quantum mechanics

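Start with a density matrix $\hat\rho$ and consider measurement with an observable $\hat B$, with eigenspace $\{|j\rangle\}$. This is a complete basis of the Hilbert space $\mathcal H$, so that (writing $\hat\rho$ as a mixture of these eigenstates with probabilities $\wp_j$)

\[\sum_j|j\rangle\langle j|=\hat 1,\qquad \hat\rho=\sum_j\wp_j|j\rangle\langle j|\]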

Consider an operator $\hat A$, with eigenspace projectors $\hat P_a$. We can consider

\[\wp_{(a|j)}=\langle j|\hat P_a|j\rangle\]

to be the conditional probability that if the state of the system is one of definite $\hat B$ (namely $|j\rangle$), then measurement of $\hat A$ results in $a$. Summing over all conditional probabilities gives the total probability that measurement of $\hat A$ results in $a$

\[\wp_a=\sum_j\wp_{(a|j)}\wp_j\]

\[=\sum_j\wp_j\langle j|\hat P_a|j\rangle=\mathrm{Tr}\big(\hat\rho\,\hat P_a\big)\]

Bayes’ theorem

\[\wp_{(j|a)}=\frac{\wp_{(a|j)}\wp_j}{\wp_a}\]

lets us construct conditional density matrices: if the system is in state $|j\rangle$ (an eigenstate of $\hat B$) before an $\hat A$ measurement is made, then after measuring $\hat A$ and getting $a$ the system is in state

\[\frac{\hat P_a|j\rangle}{\sqrt{\wp_{(a|j)}}}\]

and summing over initial pure states (using Hermiticity of $\hat P_a$ and cancellation of some phase factors)

\[\hat\rho_a=\sum_j\wp_{(j|a)}\frac{\hat P_a|j\rangle}{\sqrt{\wp_{(a|j)}}}\frac{\langle j|\hat P_a}{\sqrt{\wp_{(a|j)}}}\]

\[=\sum_j {\wp_j \wp_{(a|j)}\over \wp_a}{\hat{P}_a|j\rangle\over \sqrt{\wp_{(a|j)}}}{\langle j|\hat{P}_a\over \sqrt{\wp_{(a|j)}}}=\sum_j {\wp_j \over \wp_a}\hat{P}_a|j\rangle\langle j|\hat{P}_a\]

\[=\frac{\hat P_a\,\hat\rho\,\hat P_a}{\mathrm{Tr}\big(\hat\rho\,\hat P_a\big)}\]
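A small numerical sketch of the conditioning step, restricted to a real two-level system so that it needs only the standard library. Assumptions, all hypothetical: $\hat\rho=0.7|0\rangle\langle0|+0.3|1\rangle\langle1|$ in the $\{|j\rangle\}$ basis, and $\hat A$ has rank-one projectors $\hat P_a=|a\rangle\langle a|$ onto a basis rotated by an angle $\theta=0.4$. For each outcome $a$ it computes $\wp_{(a|j)}$, $\wp_a$, the Bayes posteriors $\wp_{(j|a)}$ and $\hat\rho_a$, and compares $\hat\rho_a$ with $\hat P_a\hat\rho\hat P_a/\wp_a$.

```python
import math

def matmul(A, B):
    """Product of two 2x2 real matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def apply(A, v):
    """Matrix-vector product A|v>."""
    return [sum(A[i][k] * v[k] for k in range(2)) for i in range(2)]

def trace(A):
    return A[0][0] + A[1][1]

def condition_on(P, basis_j, wp_j):
    """Condition on the outcome a whose projector is P = P_a.

    Returns (wp_a, [wp(j|a)], rho_a), using wp(a|j) = <j|P_a|j>,
    wp_a = sum_j wp(a|j) wp_j, Bayes for wp(j|a), and
    rho_a = sum_j (wp_j / wp_a) P_a|j><j|P_a.
    """
    wp_a_given_j = [sum(x * y for x, y in zip(j, apply(P, j))) for j in basis_j]
    wp_a = sum(w * c for w, c in zip(wp_j, wp_a_given_j))
    posterior = [w * c / wp_a for w, c in zip(wp_j, wp_a_given_j)]
    rho_a = [[0.0, 0.0], [0.0, 0.0]]
    for w, j in zip(wp_j, basis_j):
        Pj = apply(P, j)                      # P_a|j> (real components)
        for r in range(2):
            for c in range(2):
                rho_a[r][c] += (w / wp_a) * Pj[r] * Pj[c]
    return wp_a, posterior, rho_a

# Hypothetical qubit example: rho = 0.7|0><0| + 0.3|1><1| in the {|j>} basis,
# and A measured in a basis {|a>} rotated by the angle theta.
basis_j = [[1.0, 0.0], [0.0, 1.0]]
wp_j = [0.7, 0.3]
rho = [[0.7, 0.0], [0.0, 0.3]]
theta = 0.4
basis_a = [[math.cos(theta), math.sin(theta)], [-math.sin(theta), math.cos(theta)]]
projectors = [[[u * v for v in a] for u in a] for a in basis_a]   # P_a = |a><a|

for P in projectors:
    wp_a, posterior, rho_a = condition_on(P, basis_j, wp_j)
    lueders = matmul(matmul(P, rho), P)                            # P_a rho P_a
    deviation = max(abs(rho_a[r][c] - lueders[r][c] / wp_a) for r in range(2) for c in range(2))
    print(f"wp_a={wp_a:.4f}  wp(j|a)={[round(x, 4) for x in posterior]}  "
          f"Tr rho_a={trace(rho_a):.4f}  max|rho_a - P rho P / wp_a|={deviation:.1e}")
```

Complex amplitudes would require conjugating the bra, which is where the phase factors mentioned in the derivation come in; with real vectors they do not arise.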