Binomial mass distribution

For a discrete random variable $X$ define the probability mass function

\[\wp(a)=\wp(X=a)\]

If $X$ can be only one of $x_1, x_2, \ldots$ then $\wp(x_i)\ge 0$ for $i=1,2,\ldots$ and $\wp(x)=0$ if $x\ne x_i$. We also must have $\sum_i \wp(x_i)=1$.

A single Bernoulli random variable is one for which $\wp(1)=p$ (success) and $\wp(0)=1-p$ (failure).

We construct the generating function (in statistical physics this is the partition function) for multiple trials of a single Bernoulli process (such as shooting arrows at a bullseye) using $t$ as a simple place-holder or enumerator. A single trial is generated by

\[\phi_1(t)=(1-p)\,t^0+p\,t^1\]

Then since all trials are independent, all probabilities are multiplicative and for $N$ trials

\[\phi(t)=\big(pt+(1-p)\big)^N\]

\[=\sum_{M=0}^N {N\choose M}\,p^M(1-p)^{N-M}\,t^M\]

This should look very familiar; this is the binomial distribution. Note that in a generating function the coefficient of $t^M$ is the probability of the event characterized by $M$.
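The claim that the coefficients of the generating function are the binomial probabilities can be checked numerically. The sketch below multiplies out $(pt+(1-p))^N$ one trial at a time and compares the coefficients with ${N\choose M}p^M(1-p)^{N-M}$; the function name and the values of `p` and `n_trials` are illustrative assumptions, not part of the notes.

```python
from math import comb

def expand_generating_function(p, n_trials):
    """Coefficients of (p*t + (1-p))**n_trials, lowest power of t first."""
    coeffs = [1.0]  # start from the constant polynomial 1
    for _ in range(n_trials):
        new = [0.0] * (len(coeffs) + 1)
        for power, c in enumerate(coeffs):
            new[power] += c * (1.0 - p)   # this trial fails: power of t unchanged
            new[power + 1] += c * p       # this trial succeeds: one more factor of t
        coeffs = new
    return coeffs

p, n_trials = 0.3, 5  # hypothetical values chosen for illustration
coeffs = expand_generating_function(p, n_trials)
binomial = [comb(n_trials, m) * p**m * (1 - p)**(n_trials - m)
            for m in range(n_trials + 1)]

for m, (c, b) in enumerate(zip(coeffs, binomial)):
    print(m, c, b)
```

Each pass through the outer loop is one independent trial, which is exactly why the probabilities multiply.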

Bernoulli-binomial random variable. The Bernoulli random variable $X$ (a non-negative integer) is also called the binomial. Calling the probability mass function $p(i)=\wp(X=i)$,

\[p(i)={n\choose i}\,p^i\,(1-p)^{n-i},\qquad i=0,1,\ldots,n\]

gives the probability of $i$ successes and $n-i$ failures in $n$ independent trials with individual success probability $p$. Note that (if $p\ne 1$)

![Rendered by QuickLaTeX.com \[\sum_{i=0}^n p(i)=\sum_{i=0}^n {n\choose i} \, p^i \, (1-p)^{n-i}=(1-p)^n\sum_{i=0}^n {n\choose i} \, \Big({p\over 1-p}\Big)^i\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-75334c7a9aee3dbc0ca2ba58e25f46d7_l3.png)

\[=(1-p)^n\Big(1+{p\over 1-p}\Big)^n=1\]

Example. An archer has a probability of $1/3$ of making a bull’s eye with each shot. What is her average score in a set of six shots?

The probability of $m$ bull’s eyes in the set is

\[\wp(m)={6\choose m}(1/3)^m(2/3)^{6-m}\]

so her average will be

![Rendered by QuickLaTeX.com \[\bar{m}=\sum_{m=0}^6 m \cdot \wp(m)= \sum_{m=0}^6 m \cdot{6\choose m} (1/3)^m(2/3)^{6-m}=2\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-76a0b9ff2fabc86d4d581ef1bd963334_l3.png)

You can see that performing the sum $\sum_m m\,\wp(m)$ could be mathematically difficult if $\wp(m)$ is complicated.
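When the sum is awkward by hand it is easy to do by machine. The following minimal sketch evaluates the archer's average exactly with rational arithmetic; the helper name `binomial_pmf` is an assumption made for this illustration.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(m, n, p):
    """Probability of m successes in n independent trials with success probability p."""
    return comb(n, m) * p**m * (1 - p)**(n - m)

n_shots, p_hit = 6, Fraction(1, 3)
mean_score = sum(m * binomial_pmf(m, n_shots, p_hit) for m in range(n_shots + 1))
print(mean_score)  # 2
```

Using `Fraction` avoids rounding, so the result is exactly $np=6\cdot{1\over 3}=2$.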

Example. The probability that a given atom out of $N$ trapped in volume $V$ will be in subvolume $v$ is $p=v/V$, and the number $n$ found in $v$ is binomial, $\wp(n)={N\choose n}p^n(1-p)^{N-n}$. This was part of a qualifier problem.

Geometric random variable. Consider independent trials, each with success probability $p$, performed until a success occurs. $X$ is the number of trials required until success,

\[\wp(X=n)=(1-p)^{n-1}\,p,\qquad n=1,2,\ldots\]

Note that

![Rendered by QuickLaTeX.com \[\wp(X\ge n)=p\sum_{m=n}^\infty (1-p)^{m-1}=p\sum_{q=0}^\infty (1-p)^{n-1+q}\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-8ffcc0f2ac09e124719abd832e4a4197_l3.png)

![Rendered by QuickLaTeX.com \[=p(1-p)^{n-1}\sum_{q=0}^\infty (1-p)^q=(1-p)^{n-1}\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-21e0b314b19020dd4eaf3a608b9754c5_l3.png)

$X$ is a geometric random variable. A distribution is memory-less if $\wp(X>n+m\,|\,X>m)=\wp(X>n)$. The only distribution taking values in the positive integers that is memory-less is the geometric, since

\[\wp(X>n+m)=\wp(X>n+m\,|\,X>m)\,\wp(X>m)=\wp(X>n)\,\wp(X>m)\]

\[\Rightarrow\quad \wp(X>n)=\wp(X>1)^n\]

and clearly $\wp(X>n)=\wp(X\ge n+1)=(1-p)^n$ satisfies this.
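Both the tail formula $\wp(X\ge n)=(1-p)^{n-1}$ and the memory-less property can be checked numerically. This is a small sketch under assumed values of `p`, `n` and `m`; the function names are hypothetical.

```python
def geometric_tail(n, p):
    """P(X >= n), where X counts trials up to and including the first success."""
    return (1.0 - p) ** (n - 1)

def geometric_tail_by_sum(n, p, terms=2000):
    """Same tail from a truncated sum of the mass function p*(1-p)**(m-1)."""
    return sum(p * (1.0 - p) ** (m - 1) for m in range(n, n + terms))

p = 0.2       # hypothetical success probability
n, m = 4, 7   # hypothetical trial counts

# Memory-less property: P(X > n+m | X > m) = P(X > n),
# written with P(X > k) = P(X >= k+1).
conditional = geometric_tail(n + m + 1, p) / geometric_tail(m + 1, p)
unconditional = geometric_tail(n + 1, p)
print(conditional, unconditional)
```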

Poisson random variable. The Poisson variable $X$ (a non-negative integer) is

\[\wp(X=i)=e^{-\lambda}{\lambda^i\over i!}\]

This is the limit of $n\rightarrow\infty$ and $p\rightarrow 0$, $np=\lambda$ of the Bernoulli variable.

Consider the simplest experiment that can be conceived of; within a vanishingly small time interval $\Delta\tau$, a process either occurs (such as a nuclear decay), with probability $p$, or does not, with probability $1-p$. The probability $\wp(M)$ that $M$ such events occur within a macroscopic time $N\,\Delta\tau$ is generated by

![Rendered by QuickLaTeX.com \[(pt +(1-p))^N =\phi(t)=\sum_{M=0}^N {N\choose M} p^M (1-p)^{N-M} \, t^M\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-04f0792f554bff7697ceba799788b151_l3.png)

![Rendered by QuickLaTeX.com \[=\sum_{M=0}^N \wp(M) \, t^M\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-f5254ed955de18f0ef4a0475de75b335_l3.png)

resulting in the binomial distribution

\[\wp(M)={N\choose M}\,p^M(1-p)^{N-M}\]

Notice that we say $\wp(M)$ rather than $\wp(M)\,dM$ for a discrete distribution.

We call $\phi(t)$ the generating function of the distribution, which is very useful in obtaining moments.

The expectation or mean of this distribution is

\[\mu=\sum_{M=0}^N M \, \wp(M) =\Big(\sum_{M=0}^N M\,\wp(M) \, t^M\Big)_{t=1}\]

\[=\Big(t{d\over dt}\sum_{M=0}^N \wp(M) \, t^M\Big)_{t=1}\]

\[=\Big(t{d\over dt}\big(pt+(1-p)\big)^N\Big)_{t=1}=Np\]

This distribution has two important limits.

First, if we consider the case of $N\rightarrow\infty$ but with $p\rightarrow 0$ such that $Np=\mu$ remains constant, we get

\[\phi(t)=\Big(1+{\mu(t-1)\over N}\Big)^N\rightarrow e^{\mu(t-1)}\]

and then

\[e^{\mu(t-1)}=\sum_{M=0}^\infty {\mu^M\over M!}\,e^{-\mu}\,t^M\]

results in the Poisson distribution

\[\wp(M)={\mu^M\over M!}\,e^{-\mu}\]

with parameter $\mu=Np$.
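The approach of the binomial to the Poisson distribution at fixed $Np$ can be seen numerically. The sketch below holds the mean fixed and compares the two mass functions for a small and a large number of trials; the mean value `mu`, the trial counts, and the function names are assumptions chosen for illustration.

```python
from math import comb, exp, factorial

def binomial_pmf(m, n, p):
    return comb(n, m) * p**m * (1 - p)**(n - m)

def poisson_pmf(m, mu):
    return exp(-mu) * mu**m / factorial(m)

mu = 2.0  # hypothetical fixed mean N*p

def max_gap(n):
    """Largest pointwise difference between binomial(n, mu/n) and Poisson(mu) for m = 0..10."""
    return max(abs(binomial_pmf(m, n, mu / n) - poisson_pmf(m, mu)) for m in range(11))

gap_small_n = max_gap(20)
gap_large_n = max_gap(20000)
print(gap_small_n, gap_large_n)
```

The gap shrinks roughly in proportion to $p=\mu/N$, which is the sense in which the Poisson form is the rare-event limit.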

Example. The probability that an ISP gets a call from a subscriber in any given hour is $p$. The ISP has $N$ subscribers. What is the probability that the ISP will get $M$ calls in the next hour?

First we see that the most probable number of calls is

\[\mu=Np\]

in an hour. Then

\[\wp(M)={\mu^M\over M!}\,e^{-\mu}\]

The second important limit of the binomial distribution is used when we want to explore the region around the maximum value $M=\bar{M}$, which is the most probable value. We will expand the natural log of the distribution around $\bar{M}$ by treating the discrete variable $M$ as if it were continuous, calling it $x$ for consistency with our previous examples;

\[\ln\wp(x)=\ln\wp(\bar{x})+\Big({d\ln\wp\over dx}\Big)_{\bar{x}}(x-\bar{x})\]

\[+{1\over 2}\Big({d^2\ln\wp\over dx^2}\Big)_{\bar{x}}(x-\bar{x})^2+\cdots\]

however the second term vanishes since $\bar{x}$ is an extreme point of the distribution. Take the logarithm of the probability

\[\ln\wp(x)=\ln N!-\ln x!-\ln (N-x)!+x\ln p+(N-x)\ln(1-p)\]

To evaluate the derivatives we use Stirling’s approximation

\[\ln n!\approx n\ln n-n\]

\[{d\over dn}\ln n!\approx \ln n\]

and so

\[{d\ln\wp\over dx}=\ln{(N-x)\,p\over x\,(1-p)}=0 \;\Rightarrow\; \bar{x}=Np,\qquad \Big({d^2\ln\wp\over dx^2}\Big)_{\bar{x}}=-{1\over \bar{x}}-{1\over N-\bar{x}}=-{1\over Np(1-p)}\]

\[\wp(x)\approx\wp(\bar{x})\,\exp\Big(-{(x-Np)^2\over 2Np(1-p)}\Big)\]

which we can normalize via

\[\int_{-\infty}^\infty \wp(x)\,dx=1 \;\Rightarrow\; \wp(x)={1\over\sqrt{2\pi Np(1-p)}}\,\exp\Big(-{(x-Np)^2\over 2Np(1-p)}\Big)\]

which we recognize as the Bell curve (the Normal distribution), peaked around $\bar{x}=Np$ with standard deviation $\sigma=\sqrt{Np(1-p)}$. Notice that the Maxwell-Boltzmann distribution is a normal distribution in velocity.

Fun fact: if ![]() , then ![]() .

Example (old format qualifier problem) A researcher performs counting of nuclear decays from a sample, making ten counts for ten seconds each, getting

![]()

counts. For how many seconds should she count in order to measure the decay rate accurate to ![]() ?

Nuclear decays are Poissonian random deviates. We want to have ![]() , so we should count long enough so that the average number of counts gotten is ![]() . We are getting ![]() now, so we should count for ![]() , ![]() .

Example (Gibbs distribution in Physics 415/715).

Let the universe $u$ consist of a system $s$ and a reservoir $r$, so $u=s+r$. The reservoir $r$ has $N_r$ boxes that can each hold $0$ or $1$ particle, and the system $s$ has $N_s$ such boxes. How many states does a universe containing $N$ particles have?

The number of ways that $N$ objects can be put into $N_s+N_r$ locations is

\[\Omega(N)={(N_s+N_r)!\over N!\,(N_s+N_r-N)!}\]

which is the coefficient of $t^N$ in

![Rendered by QuickLaTeX.com \[(1+t)^{N_r}(1+t)^{N_s}=(1+t)^{N_r+N_s}=\sum_{m=0}^{N_s+N_r}{N_s+N_r\choose m}t^m\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-f39323b1715ed4d028391aae4c885ad8_l3.png)

Carefully expand the left side;

![Rendered by QuickLaTeX.com \[(1+t)^{N_r}(1+t)^{N_s}=\Big(\sum_{p=0}^{N_r}{N_r\choose p}t^p\Big)\Big(\sum_{q=0}^{N_s}{N_s\choose q}t^q\Big)\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-269a88d8534dda3669fdde7b20e9b7d6_l3.png)

\[=\sum_{p=0}^{N_r}\sum_{q=0}^{N_s}{N_r\choose p}{N_s\choose q}\,t^{p+q}\]

By comparing these two expressions and picking out the coefficient of $t^N$ on each side we obtain

![Rendered by QuickLaTeX.com \[\Omega(N)={(N_s+N_r)!\over N!(N_s+N_r-N)!}=\sum_{p+q=N}{N_r\choose p}{N_s\choose q}\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-99622ce75a0dfd70224ae702198293ca_l3.png)

\[=\sum_{q=0}^N{N_r\choose N-q}{N_s\choose q}=\sum_{q=0}^N \Omega_r(N-q)\,\Omega_s(q)\]

where $\Omega_s(q)$ is the number of states of the system $s$ when populated with $q$ particles and $\Omega_r(N-q)$ is the number of states of the reservoir $r$ when populated with $N-q$ particles. These are mass functions since $N$ is a discrete variable; we would use $\Omega(N)\,dN$ if $N$ were continuous.

What is the probability that $s$ contains exactly $m$ particles?

This will be the ratio of the number of states with $m$ particles in $s$ and $N-m$ in $r$ to the total number of states

![Rendered by QuickLaTeX.com \[\wp(m)={{N_r\choose N-m}{N_s\choose m}\over {N_s+N_r\choose N}}\]](http://abadonna.physics.wisc.edu/wp-content/ql-cache/quicklatex.com-48b6a72c7668df252c4c6488f5e1fc37_l3.png)
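The counting identity and the resulting probability can be verified exactly for small box counts. The sketch below checks that the convolution of system and reservoir state counts reproduces the total number of states, and that $\wp(m)$ sums to one; the box and particle numbers and the function name are hypothetical values chosen only for illustration.

```python
from fractions import Fraction
from math import comb

def system_occupation_pmf(m, n_s, n_r, n_total):
    """Probability that the system holds m of the n_total particles."""
    return Fraction(comb(n_r, n_total - m) * comb(n_s, m), comb(n_s + n_r, n_total))

n_s, n_r, n_total = 4, 10, 5  # hypothetical box and particle counts

# Sum over q of Omega_r(N - q) * Omega_s(q) should equal Omega(N).
convolution = sum(comb(n_r, n_total - q) * comb(n_s, q) for q in range(n_total + 1))
total_states = comb(n_s + n_r, n_total)

pmf = [system_occupation_pmf(m, n_s, n_r, n_total) for m in range(n_total + 1)]
mean_m = sum(m * prob for m, prob in enumerate(pmf))
print(convolution, total_states, sum(pmf), mean_m)
```

`math.comb` returns zero when asked to place more particles than there are boxes, so occupations that are impossible for the system drop out automatically.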

The Boltzmann entropy of the system containing $m$ particles is $S(m)=k\ln\big(\Omega_r(N-m)\,\Omega_s(m)\big)$, and **Boltzmann’s maximal entropy principle** says that the equilibrium population $\bar{m}$ is the most probable. Use Stirling’s formula to evaluate this: maximize the entropy with respect to $m$

\[{\partial\over\partial m}\Big(\ln\Omega_r(N-m)+\ln\Omega_s(m)\Big)=\ln{N-m\over N_r-(N-m)}-\ln{m\over N_s-m}=0\]

taking anti-logs

\[{N-m\over N_r-(N-m)}={m\over N_s-m}\]

we obtain the result that the most likely distribution of particles between system $s$ and reservoir $r$ is the one that makes their **densities** $m/N_s$ and $(N-m)/N_r$ equal! Define the **chemical potential**

![]()

which represents the work needed to move a particle from $r$ to $s$ when $s$ and $r$ are in thermal and matter equilibrium, and $r$ is at temperature $T$. Then for ![]()

![]()

![]()

This is an interesting and exact result. Notice that the equilibrium condition says equilibrium between $s$ and $r$ occurs when

![]()

then there is no energy cost to move particles from $r$ to $s$. Another observation is that if ![]() , then the density of $s$ (the number of particles in $s$) is low. Under those conditions matter would migrate from $r$ into $s$ until ![]() ; matter migrates from high to low $\mu$.

Stirling’s approximation

The gamma function is an analytic continuation of the factorial to non-integer values

\[\Gamma(z)=\int_0^\infty x^{z-1}e^{-x}\,dx\]

If $n$ is an integer, then $\Gamma(n+1)=n!$, which is easy to prove by integration by parts or by induction.

Recall

\[n!=\Gamma(n+1)=\int_0^\infty x^n e^{-x}\,dx\]

\[=\int_0^\infty e^{-f(x)}\,dx,\qquad f(x)=x-n\ln x\]

Find the $x$-value $x_0$ that makes $f(x)$ minimal, since it contributes the most to the integral, and expand $f(x)$ around it

\[f'(x_0)=1-{n\over x_0}=0 \;\Rightarrow\; x_0=n\]

\[f(x)\approx n-n\ln n+{(x-n)^2\over 2n}\]

Put this back into $\Gamma(n+1)$, and use the integral $\int_{-\infty}^\infty e^{-ax^2}\,dx=\sqrt{\pi/a}$ to obtain an approximate formula for $n!$

\[n!\approx\sqrt{2\pi n}\;n^n e^{-n}\]

when $n$ is very large. Finally you will need to use

\[\ln n!\approx n\ln n-n\]

for $n$ very large.
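The quality of Stirling's approximation is easy to gauge numerically. The sketch below compares $\ln n!$, computed with the standard-library `math.lgamma`, against the standard forms $n\ln n-n+{1\over 2}\ln(2\pi n)$ and the cruder $n\ln n-n$; the function names and the sample values of $n$ are assumptions made for illustration.

```python
from math import lgamma, log, pi

def log_factorial_exact(n):
    """ln(n!) via the log-gamma function, since Gamma(n+1) = n!."""
    return lgamma(n + 1)

def log_factorial_stirling(n):
    """ln of sqrt(2*pi*n) * n**n * exp(-n)."""
    return n * log(n) - n + 0.5 * log(2 * pi * n)

def log_factorial_crude(n):
    """Leading terms only: n*ln(n) - n."""
    return n * log(n) - n

for n in (10, 100, 1000):
    exact = log_factorial_exact(n)
    print(n, exact, log_factorial_stirling(n), log_factorial_crude(n))
```

The crude form drops a term that grows only like $\ln n$, so its relative error vanishes for large $n$ even though its absolute error does not.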

Cumulants

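Let $\{X\le b\}$ denote the event that $X$ takes a value no larger than $b$. The cumulative distribution function

\[F(b)=\wp(X\le b)\]

is non-decreasing, approaches $1$ for $b\rightarrow\infty$ and $0$ for $b\rightarrow-\infty$ and is right-continuous

\[\lim_{\epsilon\rightarrow 0^+}F(b+\epsilon)=F(b)\]

Note that

\[\{X\le b\}=\{X\le a\}\cup\{a<X\le b\}\]

\[\wp(X\le b)=\wp(X\le a)+\wp(a<X\le b)\]

or

\[\wp(a<X\le b)=F(b)-F(a)\]

and the calculation of $\wp(X<b)$ is a simple limit

\[\wp(X<b)=\lim_{n\rightarrow\infty}\wp\Big(X\le b-{1\over n}\Big)\]

\[=\lim_{n\rightarrow\infty}F\Big(b-{1\over n}\Big)\]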

Example (Problem 4.1)

Two balls are randomly chosen from an urn containing 8 white, 4 black and 2 orange balls. You win $2$ dollars for each black, and lose $1$ dollar for each white selected. Let $X$ denote your winnings. What are the possible values of $X$, and find the mass function for the distribution of this variable.

Probability of $2$ white (-2): ${8\choose 2}/{14\choose 2}=28/91$, probability of $2$ black (4): ${4\choose 2}/{14\choose 2}=6/91$, probability of $1$ white no black (-1): $8\cdot 2/91=16/91$, probability of $1$ black no white (2): $4\cdot 2/91=8/91$, probability of no white no black (0): ${2\choose 2}/{14\choose 2}=1/91$, probability of $1$ white $1$ black (1): $8\cdot 4/91=32/91$.

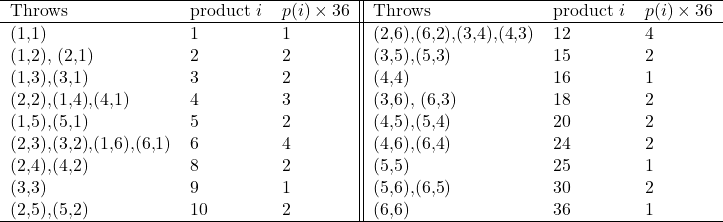
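The mass function for the urn problem can be confirmed by brute-force enumeration of all ${14\choose 2}=91$ equally likely pairs. This is a minimal sketch; the payoffs of $+2$ per black and $-1$ per white are read off from the winnings listed in parentheses above, and the variable names are illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations

balls = ["W"] * 8 + ["B"] * 4 + ["O"] * 2
payoff = {"W": -1, "B": 2, "O": 0}  # dollars per ball drawn

# Enumerate every unordered pair of distinct balls.
pairs = list(combinations(range(len(balls)), 2))
counts = Counter(payoff[balls[i]] + payoff[balls[j]] for i, j in pairs)
pmf = {x: Fraction(c, len(pairs)) for x, c in sorted(counts.items())}

for winnings, prob in pmf.items():
    print(winnings, prob)
```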

Example

Two fair dice are rolled. Let $X$ equal the product of the values. Compute $\wp(X=i)$ for each $i$.
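Since there are only 36 equally likely outcomes, the mass function of the product can be tabulated by direct enumeration. The following is a short sketch with illustrative variable names.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Count how many of the 36 ordered rolls give each product.
counts = Counter(a * b for a, b in product(range(1, 7), repeat=2))
pmf = {value: Fraction(c, 36) for value, c in sorted(counts.items())}

for value, prob in pmf.items():
    print(value, prob)
```

Values of $i$ that do not appear in the table, such as 7 or 11, have probability zero.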

Example

Suppose that the number of accidents occurring on a highway each day is a Poisson random variable with parameter $\lambda$.

A. Find the probability of 3 or more accidents per day.

B. Find the probability of 3 or more accidents per day provided at least one accident has occurred.

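\[\wp(X\ge 3)=1-\wp(0)-\wp(1)-\wp(2)\]

\[=1-e^{-\lambda}\Big(1+\lambda+{\lambda^2\over 2}\Big)\]

Let $A$ be the event of $3$ or more accidents, and $B$ the event of $1$ or more accidents

\[\wp(A|B)={\wp(A\cap B)\over \wp(B)}={\wp(A)\over \wp(B)}\]

\[={1-e^{-\lambda}\big(1+\lambda+\lambda^2/2\big)\over 1-e^{-\lambda}}\]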
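Both parts can be evaluated numerically once a rate is chosen. The sketch below uses `lam = 3.0` purely as an assumed illustrative value, not the value from the problem statement; the function name is also hypothetical.

```python
from math import exp, factorial

def poisson_pmf(i, lam):
    """Probability of exactly i events for a Poisson variable with parameter lam."""
    return exp(-lam) * lam**i / factorial(i)

lam = 3.0  # hypothetical rate, an assumption for this illustration

# Part A: three or more accidents.
p_at_least_3 = 1.0 - sum(poisson_pmf(i, lam) for i in range(3))

# Part B: three or more, given at least one. The event "3 or more"
# is contained in "1 or more", so the joint probability is P(3 or more).
p_at_least_1 = 1.0 - poisson_pmf(0, lam)
p_conditional = p_at_least_3 / p_at_least_1

print(p_at_least_3, p_conditional)
```

Conditioning on at least one accident can only raise the probability, since it removes the zero-accident outcome from the sample space.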

Example

If you buy a lottery ticket in $n$ lotteries, in each of which your chance of winning a prize is $p$, what is the probability that you will win a prize at least once, exactly once, at least twice?

This is the limit of a binomial (Bernoulli) random number; many trials each with small probability

\[\lambda=np\]

\[\wp(i)=e^{-\lambda}{\lambda^i\over i!}\]

\[\wp(X\ge 1)=1-\wp(0)=1-e^{-\lambda}\]

\[\wp(X=1)=\lambda\,e^{-\lambda}\]

\[\wp(X\ge 2)=1-\wp(0)-\wp(1)=1-e^{-\lambda}(1+\lambda)\]

Example

From the generic grand canonical partition function for identical particles with single-particle partition functions $Z_1$ determine the distribution function for the number of particles $N$ in the system when in equilibrium with a heat and particle reservoir. What is $\bar{N}$, the average value?

\[\mathcal{Z}=\sum_{N=0}^\infty {Z_1^N\over N!}\,e^{\mu N/kT}=\exp\big(Z_1e^{\mu/kT}\big)\]

\[\wp(N)={1\over\mathcal{Z}}\,{\big(Z_1e^{\mu/kT}\big)^N\over N!}={\bar{N}^N\over N!}\,e^{-\bar{N}}\]

\[\bar{N}=\sum_{N=0}^\infty N\,\wp(N)=Z_1e^{\mu/kT}\]

This is a common qualifier problem these days.